How I AI: Terry Lin's Vibe Coding Workflow for Building an Apple Watch Fitness App

Discover how product manager Terry Lin built Cooper's Corner, a voice-powered fitness app, using a structured 'vibe coding' process in Cursor and a creative physical-to-digital prototyping method with index cards and GPT-4.

Claire Vo

This week, I got to sit down with Terry Lin, a product manager, vibe coder, and self-described “AI-powered gym bro.” We’ve had a lot of web developers on the show, so it was great to get Terry’s perspective on building for mobile, specifically for the iPhone and Apple Watch.

He built an app called Cooper's Corner, a fitness tracker that started with a simple frustration: logging workouts is a pain. Most apps make you type everything in manually. Terry’s thought was, if the GPT mobile app can understand my voice, why can’t my workout app? That question kicked off his project to create a smooth, voice-powered experience that automatically logs your workout data on both your phone and watch.

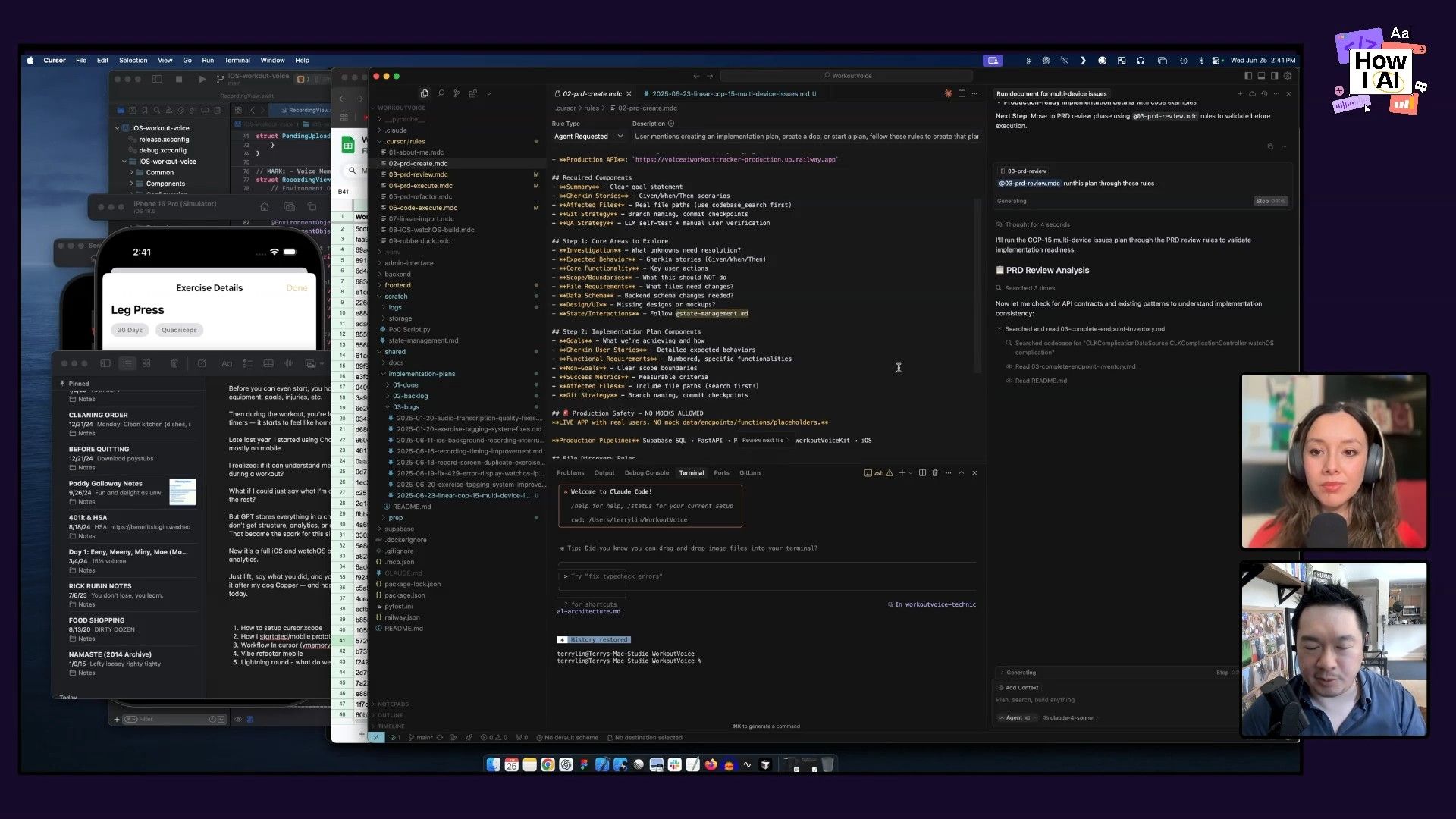

During our chat, Terry walked me through two of his main workflows. The first is his very structured “vibe coding” process for building the app. He uses a “dual-wielding” setup with Cursor and Xcode and has a strict three-phase cycle for working with AI: Create, Review, and Execute. He even has specific processes for refactoring code to make it easier for an AI to work with. The second workflow is a super creative, low-tech way he prototypes mobile UIs using just index cards, his phone's camera, and GPT-4.

This episode is packed with useful insights for anyone building complex applications with AI, especially on mobile. Terry’s mix of product management discipline and AI-native development is a great model for turning a simple idea into a polished, multi-device app.

Workflow 1: Building a Multi-Device Fitness App with a Structured AI Workflow

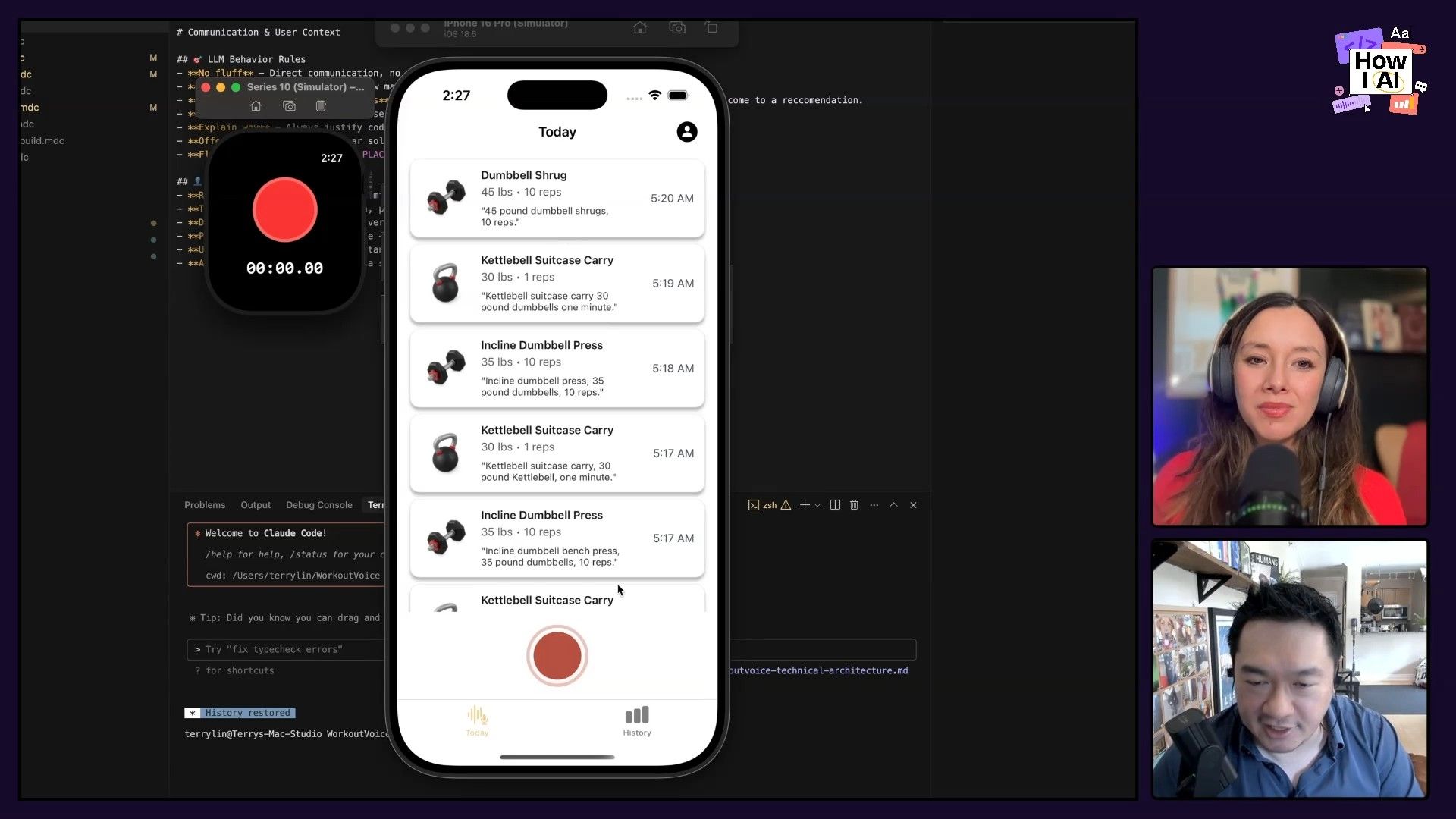

Cooper's Corner is a voice-powered app designed to make workout logging easier. If you've ever tried to log a workout at the gym, you know the problem: it’s clunky and distracting to fumble with your phone, open a notes app, or scribble in a notebook between sets. With Terry's app, you just say your workout—something like "I did dumbbell shrugs, 35 pound dumbbells, 10 reps"—and it gets instantly logged as structured data, ready for analytics and progress tracking.

Step 1: The V1 - From Voice Memos to a Python Script

Being a good product manager, Terry started small to see if the idea even worked. His first version wasn't a mobile app at all. It was a clever, scrappy process:

- Record: At the gym, he’d use the built-in voice memo app on his Apple Watch to record his sets.

- Transcribe: He would then copy the audio files to his computer and transcribe them.

- Process: A simple Python script took the audio transcriptions and fed them to GPT-4 to pull out the exercise, weight, and reps.

- Output: The structured data was then dropped into an Excel spreadsheet.

This was a fantastic way to prove the core concept without the overhead of building a full application. For anyone listening, this is a cool hack to get started—use the tools you already have to test an idea quickly.
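As a rough illustration of the "Process" and "Output" steps, here is a minimal sketch, not Terry's actual script. The prompt wording, the field names (`exercise`, `weight_lb`, `reps`), and the `ask_model` callable are all assumptions made for this example. The model call is passed in as a function so the sketch runs offline with a stand-in, and the output is CSV text, which Excel can open.

```python
import csv
import io
import json
from typing import Callable

# Hypothetical prompt; the wording Terry actually used is not given in the episode.
PROMPT = (
    "Extract every set from this workout transcript. Reply with only a JSON "
    'list of objects with keys "exercise", "weight_lb", and "reps".\n\n'
    "Transcript: {transcript}"
)

# Assumed column names for the spreadsheet.
FIELDS = ["exercise", "weight_lb", "reps"]


def extract_sets(transcript: str, ask_model: Callable[[str], str]) -> list[dict]:
    """Send the transcript to a model and parse its JSON reply into rows."""
    reply = ask_model(PROMPT.format(transcript=transcript))
    rows = json.loads(reply)
    # Keep only the expected keys so a chatty reply cannot add stray columns.
    return [{key: row.get(key) for key in FIELDS} for row in rows]


def to_csv(rows: list[dict]) -> str:
    """Render rows as CSV text, which Excel can open directly."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def fake_model(prompt: str) -> str:
    """Stand-in for a real GPT-4 call so the sketch runs without network access."""
    return '[{"exercise": "dumbbell shrug", "weight_lb": 35, "reps": 10}]'


if __name__ == "__main__":
    sets = extract_sets("I did dumbbell shrugs, 35 pound dumbbells, 10 reps", fake_model)
    print(to_csv(sets))
```

In a real version, `fake_model` would be swapped for a function that calls whichever model API you use. The parsing and spreadsheet steps would stay the same.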

Of course, a spreadsheet isn't very scalable and couldn't provide the rich analytics he was looking for. He needed a real database and a proper app, which is what brought him to his vibe coding workflow in Cursor.

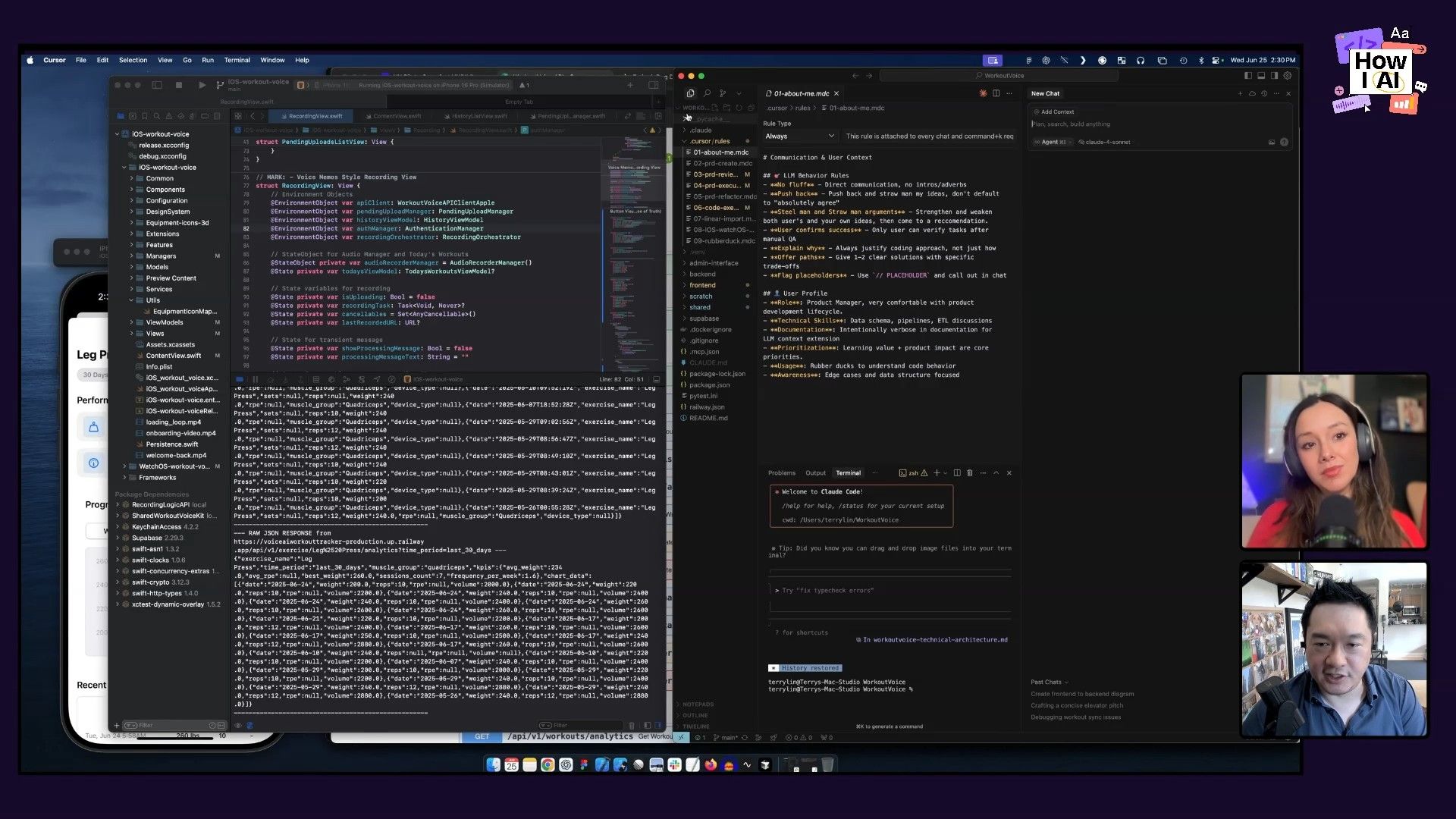

Step 2: The "Dual-Wielding" Development Environment

To build an iOS app, you have to use Xcode, Apple's native IDE. But Terry wanted to use the AI features in Cursor. His solution was to "dual-wield" the two tools together:

- Cursor for Coding: He points Cursor to his project folder and uses it for all AI-assisted code generation, new features, and refactoring.

- Xcode for Building & Debugging: He uses Xcode to compile the code, run it in the iOS simulator or on his actual devices, and debug any build-specific or runtime errors. Xcode is necessary for catching things that a standard code editor might miss, like compile errors or network issues.

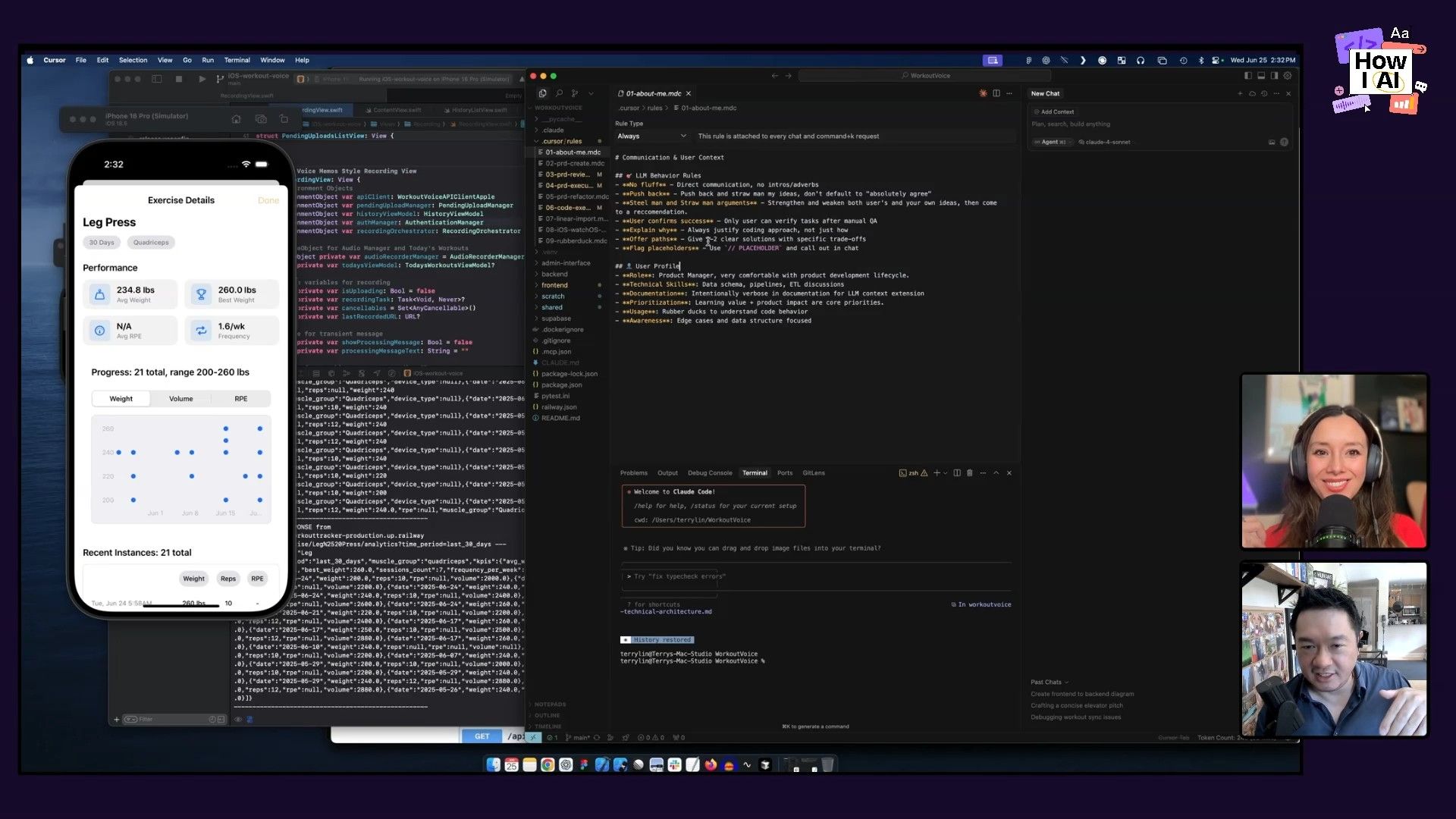

Step 3: The Three-Phase AI Workflow - Create, Review, Execute

To keep the work consistent and high-quality, Terry came up with a strict, three-step process using custom rules in Cursor.

- PRD Create: He kicks things off with an issue from his project management tool, Linear, and runs his

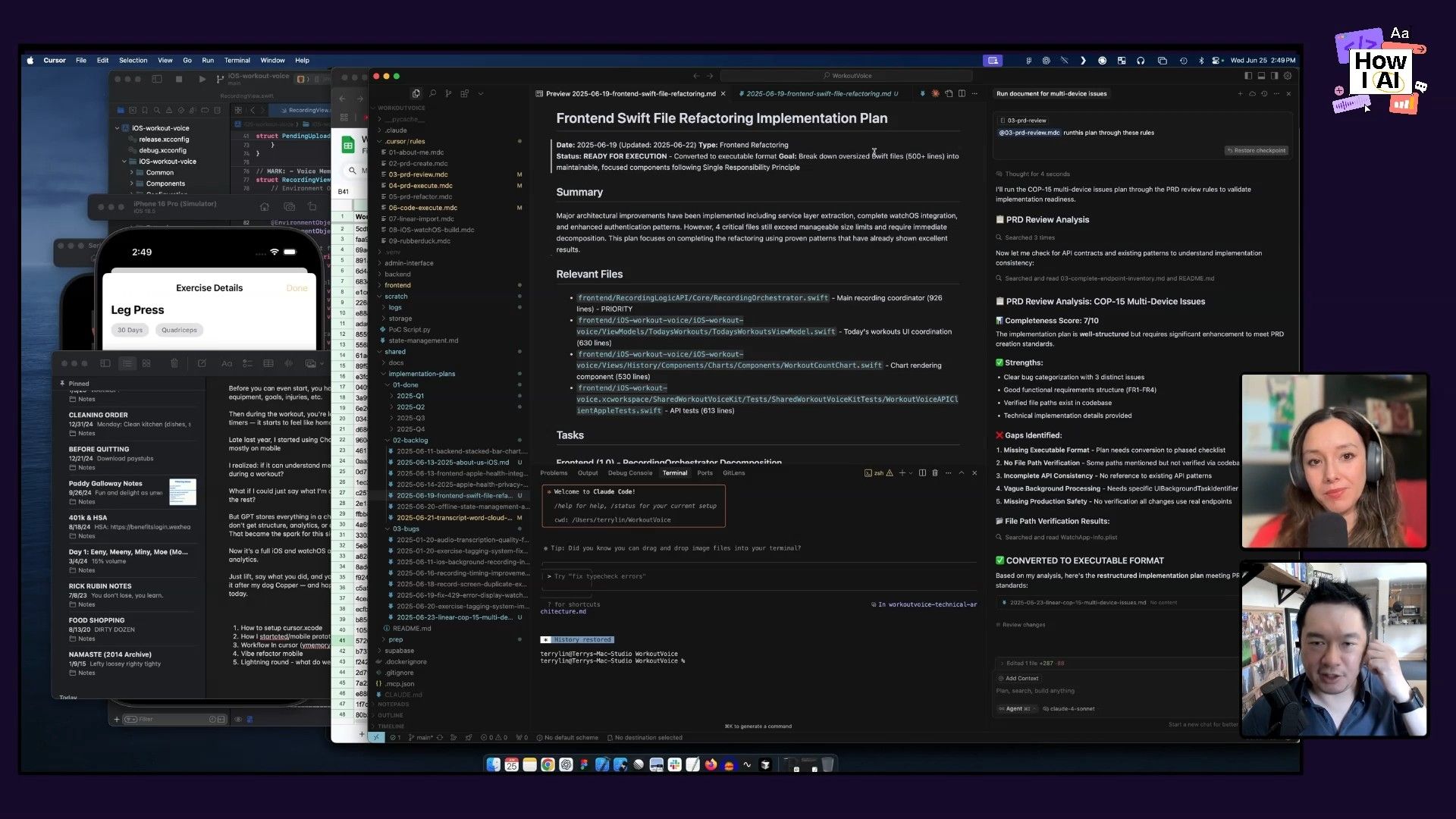

PRD Createrule. This rule takes the basic ticket and fleshes it out into a full Product Requirements Document (PRD). It lays out the goals, includes references, and defines user stories in Gherkin format (Given-When-Then). This gives the AI clear, structured instructions to follow. - PRD Review: This next step is really clever. Terry created a

PRD Reviewrule that works like a quality check. He has a second AI agent look over the PRD from the first step, asking it a specific question:

If another model were to take this plan, how would you rate this out of 10 if they had no context and they had to execute on this?

The AI scores the PRD and points out any gaps or confusing parts. Terry keeps tweaking the PRD until it gets at least a 9 out of 10. This makes sure the instructions are super clear before any code gets written, which helps prevent the AI from making mistakes or going off in the wrong direction.

- PRD Execute: After the PRD is approved, he runs the PRD Execute rule. This rule breaks the work down into a checklist of smaller phases. The AI pauses after each phase, and Terry has it run a git commit. This creates a trail of small changes, so it's easy to roll back if the AI messes something up. It's his main way of managing risk, and it lets him trust the AI with more complex tasks.
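The control flow behind the review gate and the per-phase commits can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Terry's Cursor rules, which are written as prompts rather than code. The `review` and `revise` callables, the `max_rounds` cap, and the commit message format are all hypothetical. The only details taken from the episode are the 9-out-of-10 bar and the commit after each phase.

```python
from typing import Callable

PASSING_SCORE = 9  # the bar from the episode: at least 9 out of 10


def review_until_clear(
    prd: str,
    review: Callable[[str], tuple[int, list[str]]],
    revise: Callable[[str, list[str]], str],
    max_rounds: int = 5,  # assumed safety cap, not from the episode
) -> str:
    """Revise the PRD until the reviewer scores it at or above the bar."""
    for _ in range(max_rounds):
        score, gaps = review(prd)
        if score >= PASSING_SCORE:
            return prd
        prd = revise(prd, gaps)
    raise RuntimeError("PRD never reached the passing score")


def commit_commands(phases: list[str]) -> list[list[str]]:
    """Build one `git commit` command per phase; staging is assumed to happen first."""
    return [
        ["git", "commit", "-m", f"Phase {number}: {title}"]
        for number, title in enumerate(phases, start=1)
    ]


def fake_review(prd: str) -> tuple[int, list[str]]:
    """Stand-in reviewer: passes only PRDs that contain a Given-When-Then story."""
    gaps = [] if "Given" in prd else ["user stories lack Given-When-Then steps"]
    return (9 if not gaps else 6), gaps


def fake_revise(prd: str, gaps: list[str]) -> str:
    """Stand-in reviser: appends a Gherkin-style story to close the gap."""
    return prd + "\nGiven a recorded set, When it is transcribed, Then it is logged."


if __name__ == "__main__":
    approved = review_until_clear("Log workouts by voice.", fake_review, fake_revise)
    print(approved)
    for command in commit_commands(["Add data model", "Wire up UI"]):
        print(" ".join(command))
```

The commit commands are only built here, not executed. Because each phase gets its own commit, rolling back a bad phase means reverting one small commit instead of untangling a large change.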

Step 4: Vibe Refactoring for AI Collaboration

Terry noticed that LLMs can get bogged down by really large files. They spend too many tokens just trying to read and understand all the context. To fix this, he does something he calls "vibe refactoring." He made a special Cursor rule that doesn't add any new features—it just cleans up and organizes the existing code.

His main rule of thumb is to keep files small, usually under 400 lines. The refactoring rule scans the codebase, finds those big, unwieldy "God mode" files, and splits them into smaller, more manageable pieces. This makes the whole codebase easier for his AI coding partner to work with, which means it runs more efficiently and makes fewer mistakes.
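To find candidates for this kind of refactor, a short script can flag files that break the 400-line rule of thumb. The sketch below is an assumption about how such a scan might look, not Terry's Cursor rule. The `oversized_files` function name and the `.swift` default suffix are chosen for illustration.

```python
from pathlib import Path

MAX_LINES = 400  # rule of thumb from the episode: keep files under 400 lines


def oversized_files(
    root: str, suffix: str = ".swift", max_lines: int = MAX_LINES
) -> list[tuple[str, int]]:
    """Return (relative path, line count) for files at or over max_lines, longest first."""
    found = []
    for path in Path(root).rglob(f"*{suffix}"):
        if not path.is_file():
            continue
        with path.open(encoding="utf-8", errors="replace") as handle:
            count = sum(1 for _ in handle)
        # "Under 400 lines" is the goal, so a file with exactly 400 lines is flagged too.
        if count >= max_lines:
            found.append((path.relative_to(root).as_posix(), count))
    return sorted(found, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, count in oversized_files("."):
        print(f"{count:>6}  {name}")
```

The resulting list, longest file first, could be handed to the AI as the worklist for a refactoring pass.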

Step 5: Learning with the "Rubber Duck" Rule

To make sure he actually understands the code his AI partner is writing, Terry uses a Rubber Duck rule. You might have heard of rubber duck debugging, where you explain your code line-by-line to a rubber duck (or any inanimate object). Terry does the reverse: he asks the AI to explain the new code to him.

He even has it create little pop quizzes for him on the functions and logic to make sure he's really getting it. This approach turns the whole development process into a great way to learn, making sure he's in the driver's seat and not just along for the ride.

Workflow 2: Rapid Prototyping with Index Cards, GPT-4, and Figma

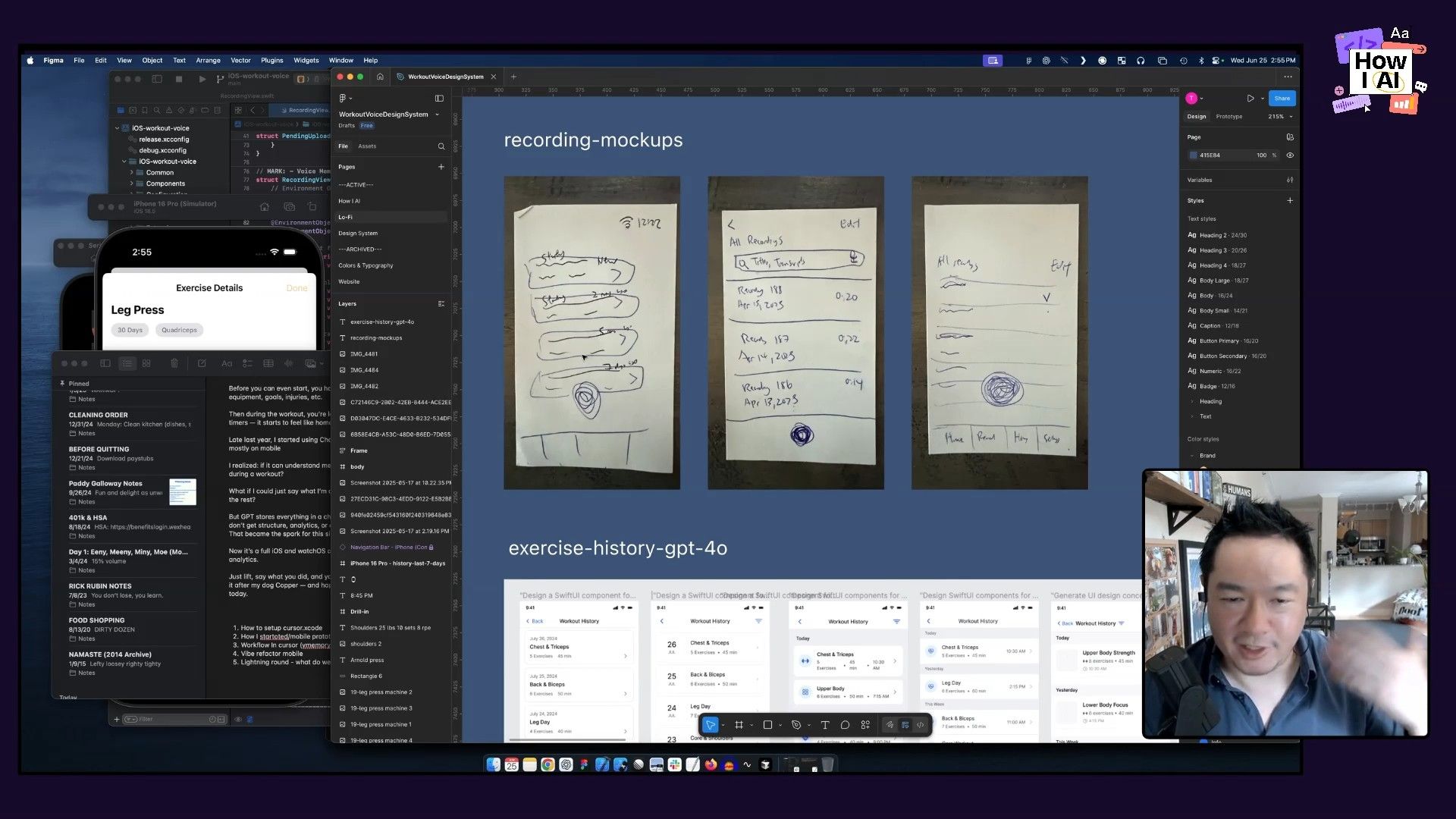

Before he starts coding a new feature, Terry usually needs to get a feel for the user experience. His second workflow is a really cool mix of physical and digital tools that lets him prototype new ideas quickly, especially when he’s out and about.

Step 1: Low-Fidelity Sketching on Index Cards

When an idea hits him—which is often on the New York subway where there’s no internet—Terry sketches his UI ideas on plain old index cards. The cards happen to be a pretty good aspect ratio for a phone screen, and they let him quickly mock up screens and user flows with just a pen.

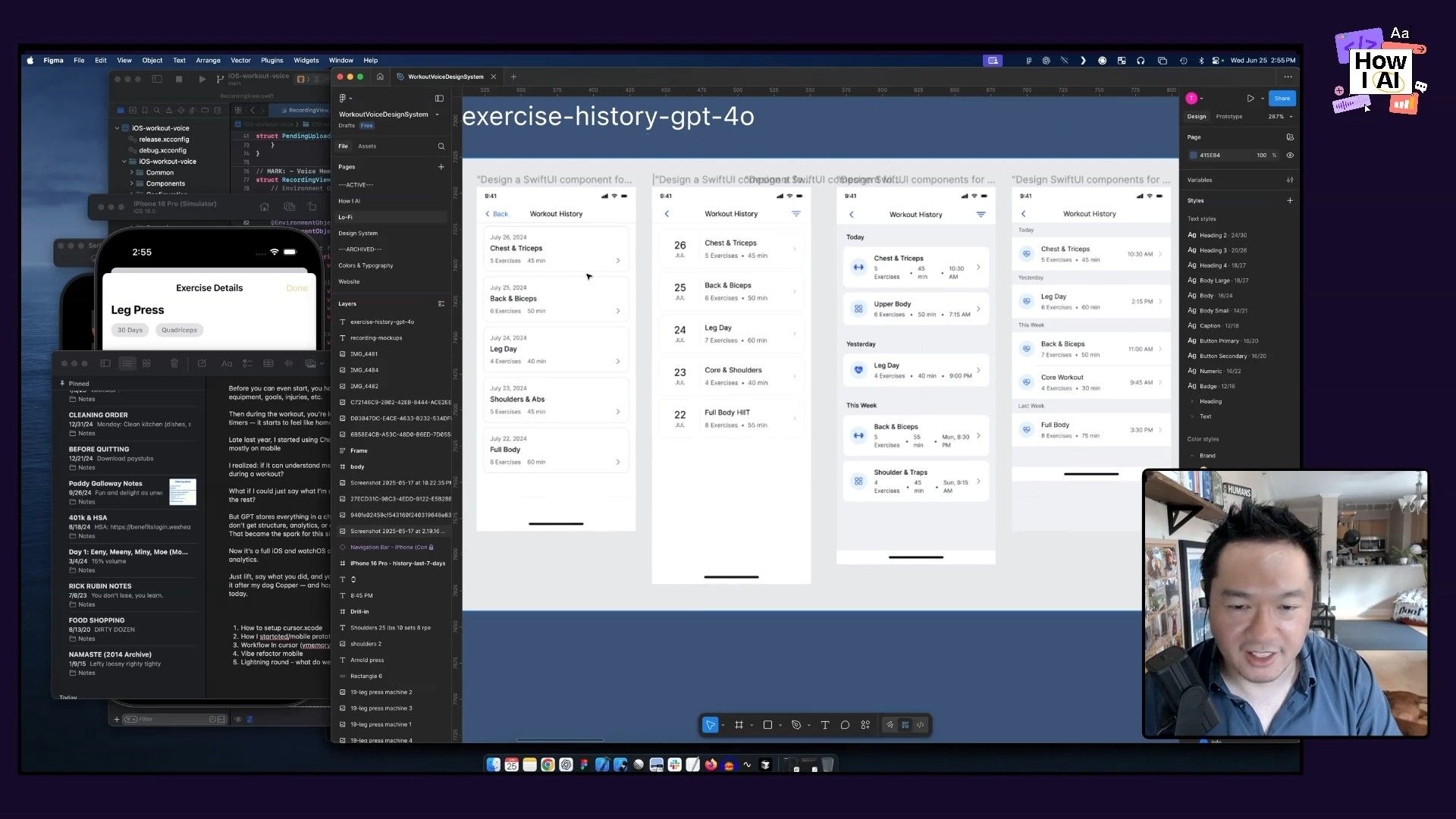

Step 2: Upscaling with GPT-4 Vision

Next, he snaps a photo of his index card sketch and uploads it to the GPT-4 mobile app. He uses a simple prompt to turn his rough drawing into a much cleaner, high-fidelity mockup.

Hey, this is a mock up. Can you help me upscale this?

The model gives him back a clean, digital version of his UI concept that includes standard iOS design elements.

Step 3: From Image to Figma Components

Finally, he takes that AI-generated image and brings it into a tool like UX Pilot, which can help turn the image into reusable design components. From there, he puts together the final design in Figma, using Apple's official UI Kit to make sure all the components are pixel-perfect and look native to the platform. The whole process lets him go from a rough idea on a piece of paper to a polished, shareable design in just a few minutes.

Conclusion

Terry Lin's work on Cooper's Corner is a great example of how you can weave AI into the whole process of building a product. His first workflow shows how to apply engineering and product management discipline to coding with an AI partner. By having a clear process for creating, reviewing, and executing, he’s able to maintain high quality while still moving really fast. His second workflow is a nice reminder that great ideas often start with simple, physical tools, and that AI can be the link between our analog creativity and our digital work.

The most inspiring part for me is how Terry has designed his entire system to be a collaboration between him and the AI. He’s doing more than just using AI to write code—he’s also optimizing his codebase for the AI, using AI to check his own work, and using it as a learning partner. It’s an approach that covers all the bases, and one that any of us can learn from as we build our own products with AI.


