How I AI: Ryan Carson's 3-Step Playbook for Structured AI Development in Cursor

Five-time founder Ryan Carson reveals his structured playbook for turning 'vibe coding' into a scalable process. Learn his three-file system for generating PRDs and tasks in Cursor, automating browser actions with MCPs, and mastering context with Repo Prompt.

Claire Vo

We're all hearing about 2025 being the year of the "Vibe Coder," where we can just intuitively create amazing things with AI's help. But as many of us are finding out, you can't always vibe your way to a scalable and maintainable product. Sometimes, you actually need a plan.

That's why I was so excited to talk with Ryan Carson, a five-time founder with 20 years of experience building, scaling, and selling startups. Ryan has developed a workflow that brings some much-needed structure to the creative chaos of coding with AI. He's figured out how to guide large language models with the same clarity you'd give a junior developer, making sure he's not just building fast, but building the right things the right way.

In this episode, Ryan walks us through his entire playbook. We get into his three-part system for turning a high-level feature idea into a detailed, AI-generated task list, all inside Cursor. He also shows us how to give AI control over other tools using MCP (Model Context Protocol) servers for things like front-end testing, and how to get surgical control over your context with a tool called Repo Prompt.

Ryan’s philosophy is simple: if you slow down and give the AI proper context, you actually speed everything up in the long run. This episode is for any founder, product manager, or developer who wants to move beyond basic prompts and build real, scalable products with AI.

Workflow 1: From PRD to Executable Tasks in Cursor

The biggest mistake we all make when working with AI is rushing. We get impatient and don't give the AI the context it needs to do its job well. Ryan’s main workflow is designed to fix this by creating a structured, repeatable process for developing features inside Cursor.

The biggest mistake that I do, that everyone does is they try to rush through the context where you just don't have the patience to tell the AI what it actually needs to know to solve your problem. And I think if we all just slow down a tiny bit and do these two steps, it speeds everything up.

He uses a straightforward system of three rule files to guide the AI from a general idea to a complete task list, and then he works through that list one item at a time. You can find his open-source rule files on GitHub.

Step 1: Generating a Product Requirements Document (PRD)

Instead of just telling the AI to "build a feature," Ryan starts by having the AI help him write a PRD. He uses a custom rule file to steer the process.

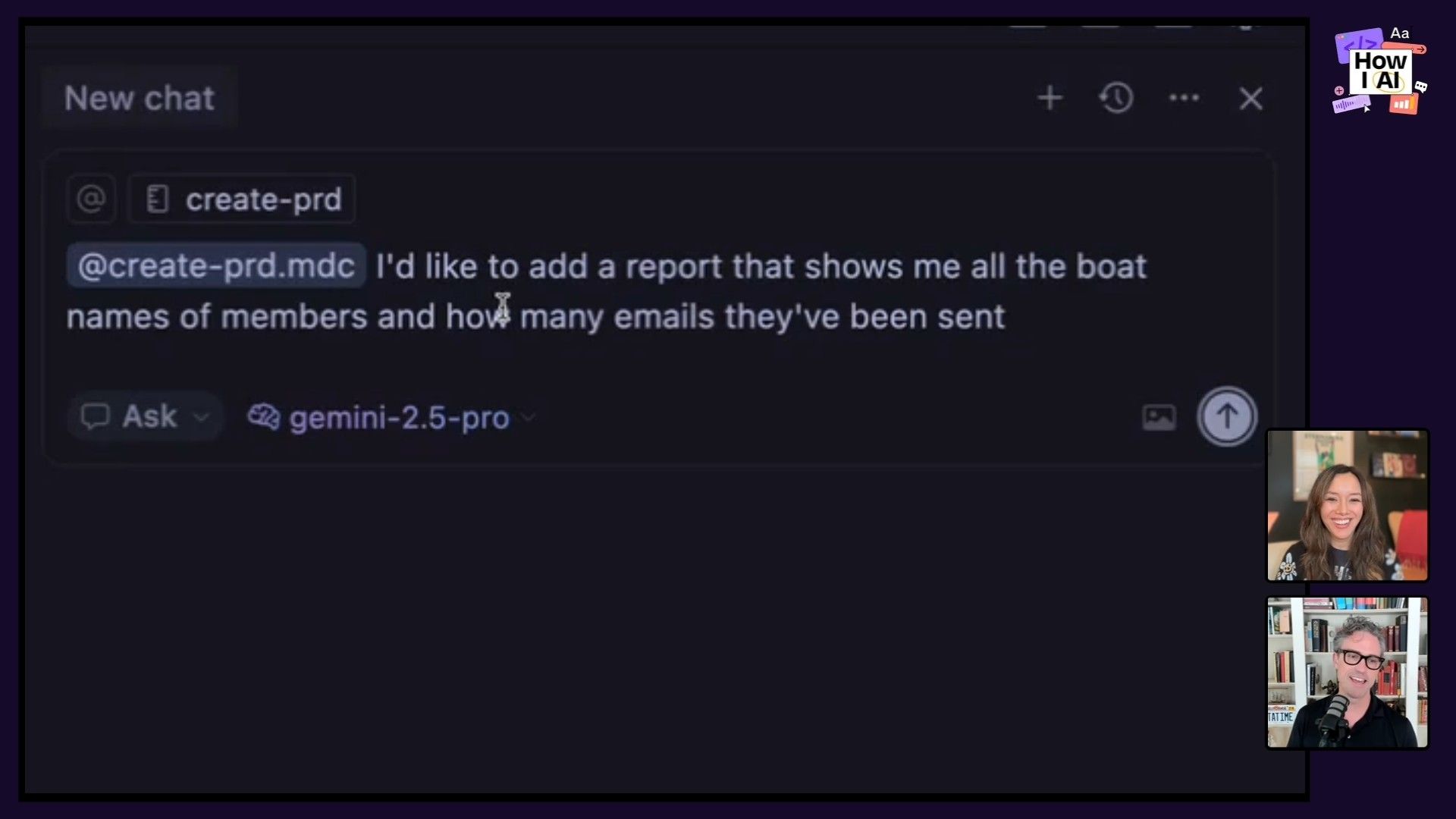

- Create a Rule File: Ryan has a file called `generate_prd.md` in a `rules` folder. This file tells the AI how to behave, what a PRD is, and to ask questions to get more detail. A key instruction is to write a PRD that is "suitable for a junior developer to understand," which helps keep the output clear and direct.

- Invoke the Rule: In a new chat window in Cursor, he @-includes the rule file and gives his high-level request.

@rules/generate_prd.md I'd like to add a report that shows me all the boat names of members and how many emails they've been sent.

- Answer Clarifying Questions: The AI, following its instructions, asks a series of questions to flesh out the PRD (e.g., "What is the problem this report is trying to solve?", "Who specifically would be using the report?"). Ryan answers these to give the AI all the necessary context.

- Generate the PRD: The AI then generates a complete `PRD.md` file and puts it in a `tasks` folder. This document covers sections such as Functional Requirements, Non-Goals, and Technical Considerations.
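Before moving on to Step 2, it can help to sanity-check that the generated PRD actually contains the sections described above. The sketch below is a hypothetical helper, not part of Ryan's rule files. It assumes the PRD marks each section with a Markdown heading named after it, optionally numbered (for example `## 4. Functional Requirements`), and reports any section that is missing.

```python
import re
from typing import List

# Section names taken from the article's description of the generated PRD;
# the exact headings Ryan's rule file produces are an assumption.
REQUIRED_SECTIONS = ["Functional Requirements", "Non-Goals", "Technical Considerations"]

# A Markdown heading, with an optional "4. " style number before the title.
HEADING = re.compile(r"^#{1,6}\s+(?:\d+\.\s*)?(.+?)\s*$", re.MULTILINE)


def missing_prd_sections(prd_markdown: str) -> List[str]:
    """Return the required section names that do not appear as Markdown headings."""
    headings = {m.group(1).strip().lower() for m in HEADING.finditer(prd_markdown)}
    return [s for s in REQUIRED_SECTIONS if s.lower() not in headings]


if __name__ == "__main__":
    sample = """# PRD: Boat Name Email Report
## Functional Requirements
1. Show each member's boat name and how many emails they've been sent.
## Non-Goals
- Editing member records.
"""
    print(missing_prd_sections(sample))  # ['Technical Considerations']
```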

Step 2: Creating a Detailed Task List

With a solid PRD in hand, the next step is to break it down into an actual engineering plan.

- Use the Generate Tasks Rule: Ryan uses another rule file, `generate_tasks.md`, which defines how to create a step-by-step task list based on a PRD.

- Provide Context: He @-includes both the `generate_tasks.md` rule and the `PRD.md` file that was just created.

@rules/generate_tasks.md Please generate the tasks for @tasks/PRD.md

- Review and Approve: The AI first proposes a high-level plan and asks for approval before breaking it down into sub-tasks. This human-in-the-loop step makes sure the plan is headed in the right direction.

- Generate the Full Task List: Once Ryan gives the okay, the AI creates a detailed `TASKS.md` file with a nested structure of parent tasks, sub-tasks, and even sub-sub-tasks, all formatted as Markdown checkboxes.

This process alone saves a ton of time by automating the often-tedious work of breaking down a PRD into engineering tickets.
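To make the nested checkbox structure concrete, here is a minimal Python sketch that reads a `TASKS.md`-style file into a flat list of tasks, each with its nesting depth and completion status. It assumes `- [ ]` / `- [x]` checkboxes indented two spaces per level. `Task` and `parse_tasks` are illustrative names that Ryan's rules do not define. In the sample, only task 1.1 comes from the episode; the other tasks are made up.

```python
import re
from dataclasses import dataclass
from typing import List

# Matches lines such as "- [ ] 1.0 Parent" or "  - [x] 1.1 Child".
CHECKBOX = re.compile(r"^(?P<indent>\s*)- \[(?P<mark>[ xX])\] (?P<text>.+)$")


@dataclass
class Task:
    depth: int  # 0 = parent task, 1 = sub-task, 2 = sub-sub-task, ...
    done: bool
    text: str


def parse_tasks(markdown: str, indent_width: int = 2) -> List[Task]:
    """Collect every checkbox line from a TASKS.md-style file, in document order."""
    tasks = []
    for line in markdown.splitlines():
        m = CHECKBOX.match(line)
        if m:
            # Tabs are expanded so that a tab counts as one indentation level.
            depth = len(m.group("indent").expandtabs(indent_width)) // indent_width
            tasks.append(Task(depth, m.group("mark").lower() == "x", m.group("text").strip()))
    return tasks


if __name__ == "__main__":
    sample = """## Tasks
- [ ] 1.0 Email campaign data model
  - [x] 1.1 Define Prisma schema for Email Campaign
  - [ ] 1.2 Add migration for the new table
- [ ] 2.0 Boat name report page
"""
    for task in parse_tasks(sample):
        print("  " * task.depth + ("[x] " if task.done else "[ ] ") + task.text)
```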

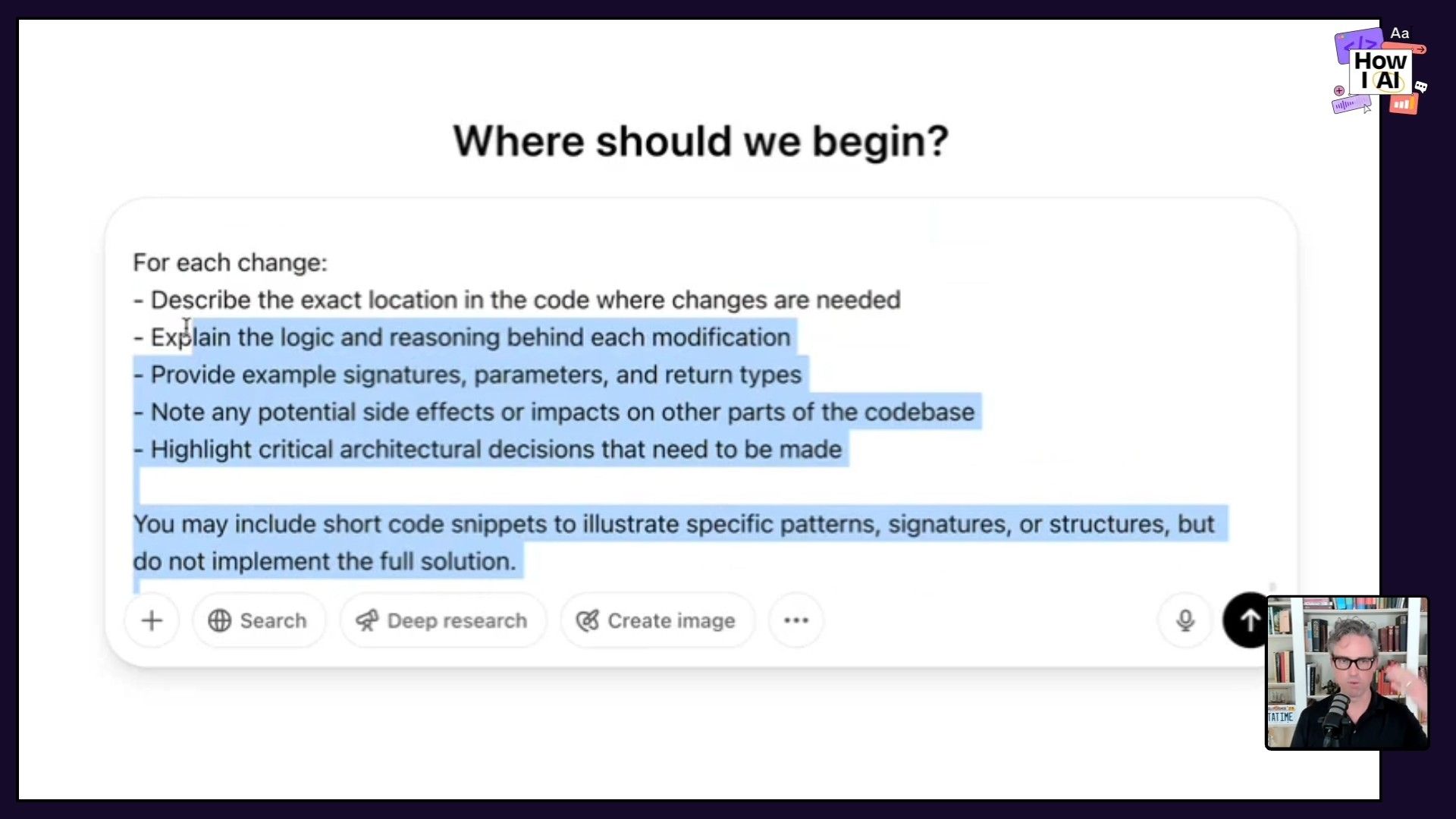

Step 3: Executing Tasks Systematically

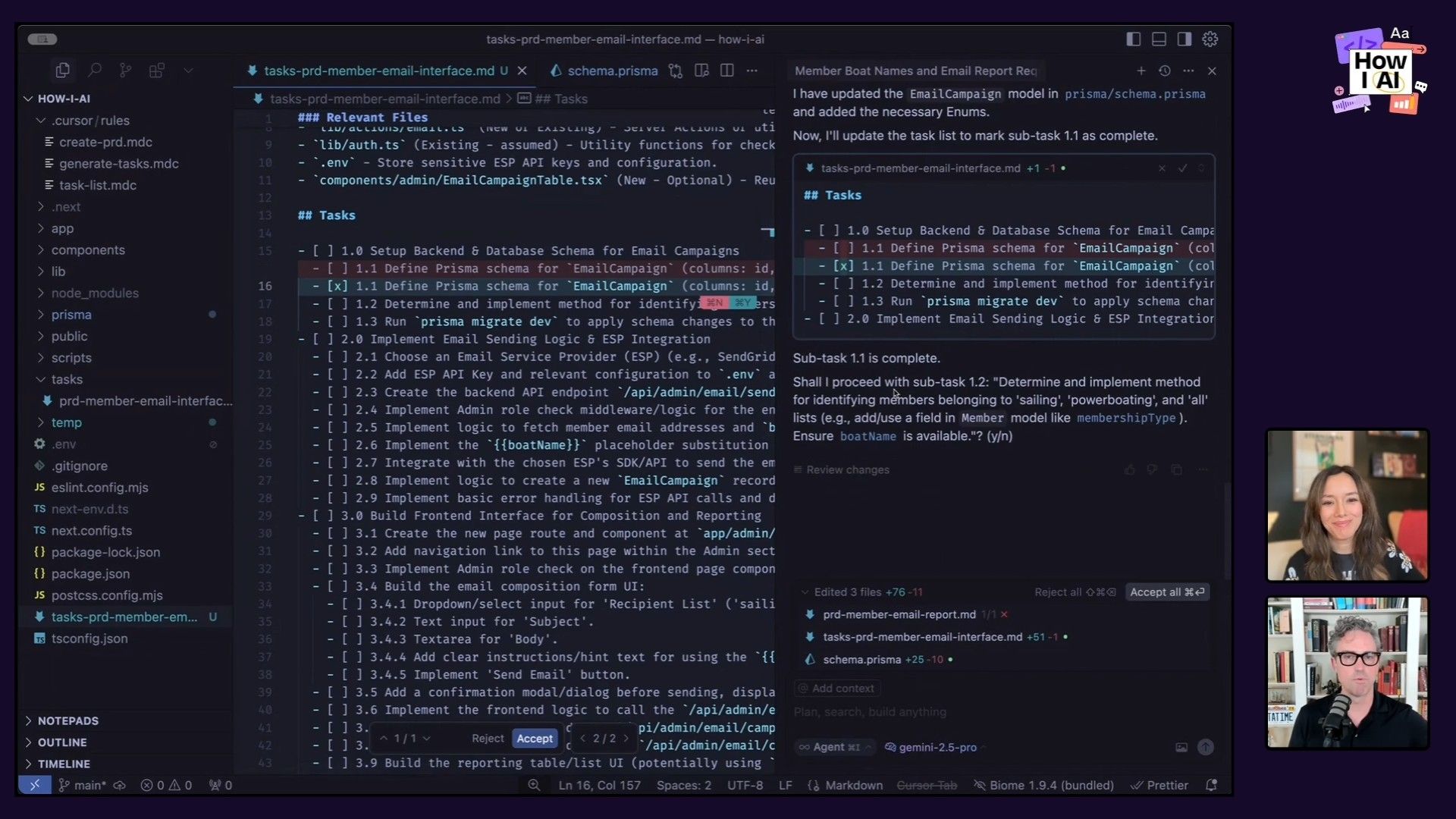

This is where it all comes together. Ryan uses a third rule, task_list_management.md, to have the AI actually work through the task list it just generated.

- Start the Work: He @-includes the management rule file along with the `TASKS.md` file.

@rules/task_list_management.md Let's start @tasks/TASKS.md

- One Task at a Time: The AI reads the task list and starts with the very first sub-task (e.g., "1.1 Define Prisma schema for Email Campaign"). It then makes the required code changes.

- Mark as Complete and Pause: After finishing the sub-task, the AI automatically edits the `TASKS.md` file to check off its box (`[ ]` becomes `[x]`). Then it stops and waits for Ryan's go-ahead. He simply types "yes" or "y" to tell it to move on to the next item.

This back-and-forth, one-step-at-a-time process is what makes the whole system so reliable. It lets Ryan catch small errors, linter issues, or other mistakes before they become bigger problems, which means he can build large, complex features correctly.
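The execution loop described above can be approximated in plain code. This is a hypothetical sketch of the control flow only; it is not the contents of `task_list_management.md`. The loop picks the first unchecked sub-task and does the work through a `do_work` callback, which stands in for the AI's code changes. It then ticks that task's box and stops unless the `approve` callback returns true. The sketch assumes two-space-indented `- [ ]` checkboxes and deliberately leaves out ticking a parent task once all of its children are done.

```python
import re
from typing import Callable, List, Optional

CHECKBOX = re.compile(r"^\s*- \[[ xX]\] ")
UNCHECKED = re.compile(r"^\s*- \[ \] ")


def indent_of(line: str) -> int:
    return len(line) - len(line.lstrip())


def next_open_task(lines: List[str]) -> Optional[int]:
    """Index of the first unchecked task with no nested checkbox directly beneath it."""
    for i, line in enumerate(lines):
        if not UNCHECKED.match(line):
            continue
        following = lines[i + 1] if i + 1 < len(lines) else ""
        has_children = bool(CHECKBOX.match(following)) and indent_of(following) > indent_of(line)
        if not has_children:
            return i
    return None


def work_through(lines: List[str],
                 do_work: Callable[[str], None],
                 approve: Callable[[str], bool]) -> List[str]:
    """Do one sub-task, tick its box, then pause for a human go-ahead before the next."""
    while (i := next_open_task(lines)) is not None:
        do_work(lines[i])                                 # stand-in for the AI's code edits
        lines[i] = lines[i].replace("- [ ]", "- [x]", 1)  # mark complete in TASKS.md
        if not approve(lines[i]):                         # stop unless the human says "y"
            break
    return lines


if __name__ == "__main__":
    tasks = [
        "- [ ] 1.0 Email campaign data model",
        "  - [ ] 1.1 Define Prisma schema for Email Campaign",
        "  - [ ] 1.2 Add migration",
    ]
    replies = iter(["y", "n"])  # scripted stand-in for typing "y" in the chat
    work_through(tasks,
                 do_work=lambda t: print("working on:", t.strip()),
                 approve=lambda t: next(replies) == "y")
    print("\n".join(tasks))
```

In Cursor, the `approve` step is simply Ryan typing "y" in the chat. The scripted replies here only make the sketch runnable without interactive input.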

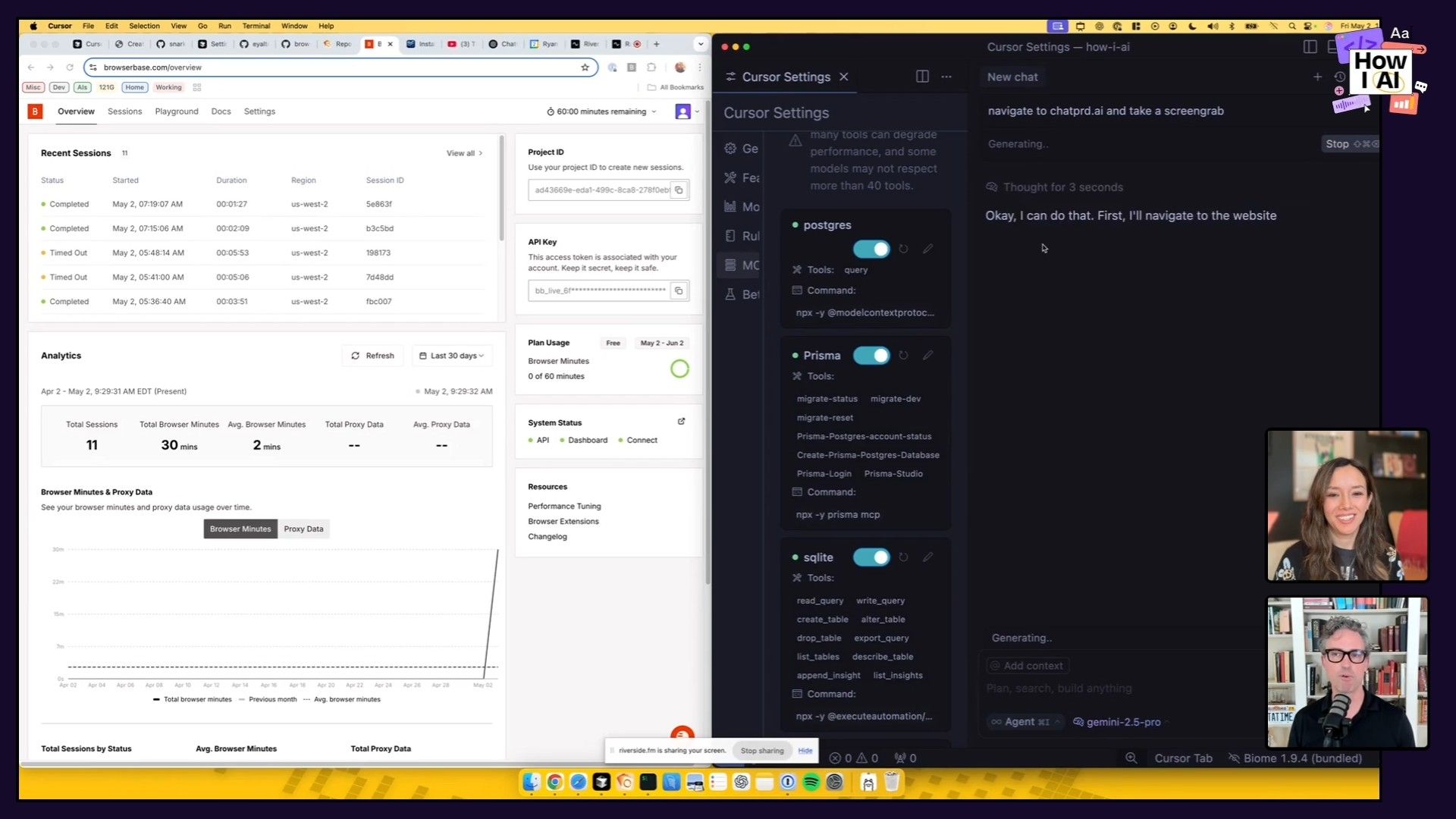

Workflow 2: Extending Cursor with Model Context Protocol (MCP) Servers

Modern development work happens all over the place. We're constantly bouncing between our IDE, a browser, a database client, and project management tools. Ryan's second workflow uses MCP (Model Context Protocol) servers to control these external tools directly from Cursor, which cuts down on busywork and context switching.

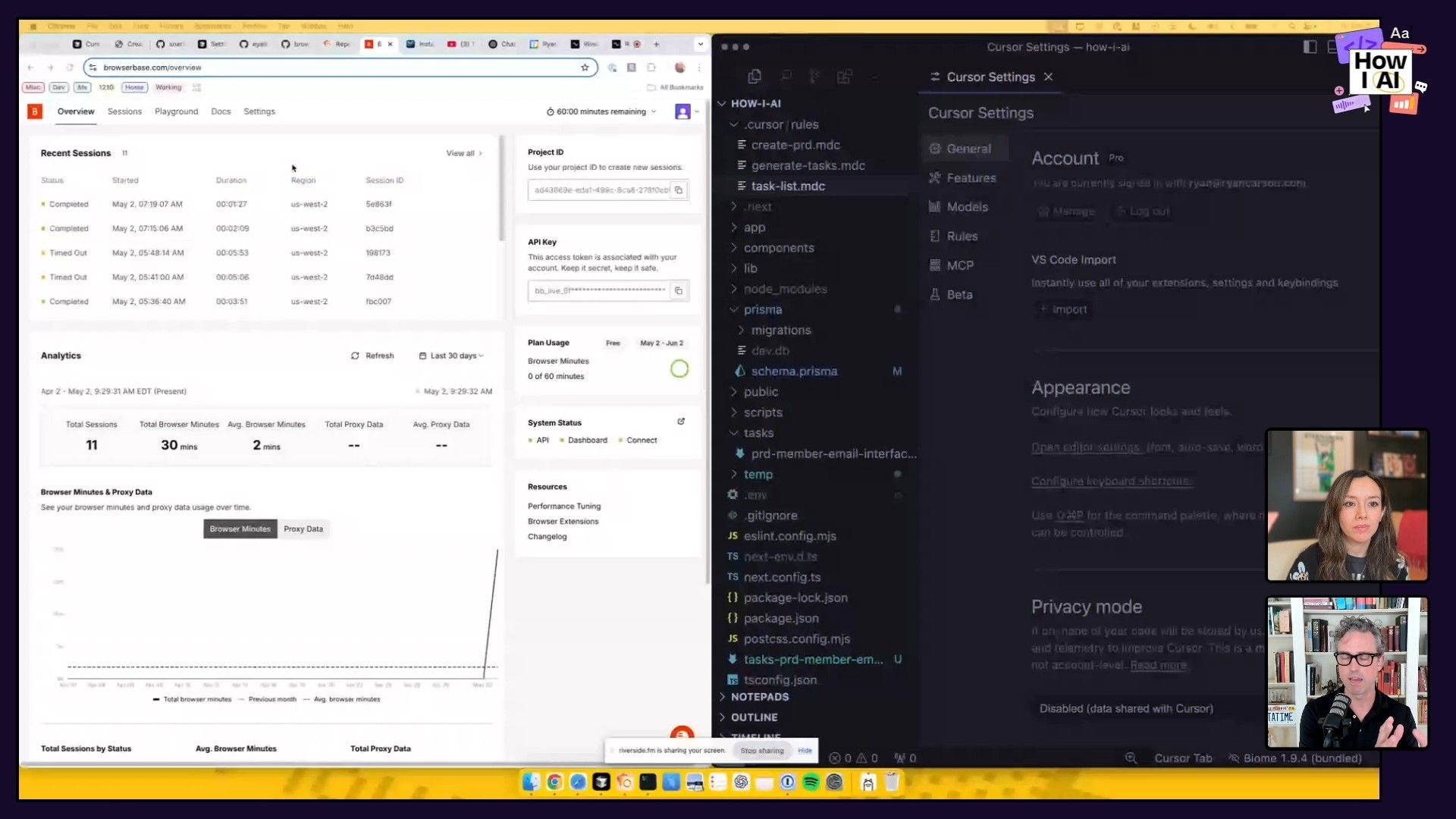

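MCP is an open protocol that lets an AI app like Cursor call tools exposed by other software through an MCP server. You can think of each server as an API designed for an LLM to use. Ryan showed us how this works using Browserbase, a service that gives you a headless browser in the cloud.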
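Under the hood, MCP messages are JSON-RPC 2.0 requests. A client discovers a server's tools with `tools/list` and invokes one with `tools/call`, passing the tool's name and its JSON arguments. The sketch below only builds those two messages. It omits the `initialize` handshake and the transport (stdio or HTTP) that a real client such as Cursor handles. The `navigate` tool and its `url` argument are hypothetical placeholders, because each server advertises its own tool names and input schemas.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids, unique per connection


def jsonrpc_request(method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    return json.dumps({"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params})


# 1. The client asks the server which tools it offers.
list_tools = jsonrpc_request("tools/list", {})

# 2. When the model decides to act, the client sends tools/call with a tool name
#    and arguments. "navigate" and "url" are hypothetical; a real server lists
#    its actual tools in the tools/list response.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "navigate", "arguments": {"url": "https://chatgpt.com"}},
)

if __name__ == "__main__":
    print(list_tools)
    print(call_tool)
```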

Step-by-Step: Automating Browser Actions

- Configure MCPs in Cursor: In Cursor's settings, you can add various MCP servers. Ryan has several set up, including Browserbase for web automation, Postgres for database queries, and Stagehand.

- Issue a Natural Language Command: In a Cursor chat window, Ryan just tells the AI what he wants to do in the browser.

navigate to chatgpt and take a screen grab

- Observe the Result: The AI uses the Browserbase MCP server to control the headless browser. We watched as the browser instance navigated to the ChatGPT website and took a screenshot, all from a simple command inside the editor.
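Cursor reads MCP server definitions from a JSON file (`.cursor/mcp.json` in a project, or `~/.cursor/mcp.json` globally) that has a top-level `mcpServers` map. The Python sketch below builds and writes such a file for a setup like Ryan's. The Browserbase package name is left as a placeholder, and its environment variable names are assumptions to confirm against Browserbase's documentation. The Postgres entry assumes the reference `@modelcontextprotocol/server-postgres` server and uses a placeholder connection string.

```python
import json
from pathlib import Path

# Assumed shape of Cursor's MCP config: each entry names the command Cursor launches.
config = {
    "mcpServers": {
        "browserbase": {
            "command": "npx",
            # Placeholder: substitute the package name from Browserbase's MCP docs.
            "args": ["-y", "<browserbase-mcp-package>"],
            # Assumed variable names; values are placeholders.
            "env": {
                "BROWSERBASE_API_KEY": "<your-api-key>",
                "BROWSERBASE_PROJECT_ID": "<your-project-id>",
            },
        },
        "postgres": {
            "command": "npx",
            # Reference Postgres server; the connection string is a placeholder.
            "args": ["-y", "@modelcontextprotocol/server-postgres", "postgresql://localhost/mydb"],
        },
    }
}


def write_cursor_mcp_config(project_root: Path, cfg: dict) -> Path:
    """Write cfg to <project_root>/.cursor/mcp.json and return the file path."""
    path = project_root / ".cursor" / "mcp.json"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(cfg, indent=2) + "\n", encoding="utf-8")
    return path


if __name__ == "__main__":
    # Print rather than write, so running the sketch doesn't touch the current directory.
    print(json.dumps(config, indent=2))
```

To create the file, call `write_cursor_mcp_config(Path("."), config)` from a project root. Then check Cursor's MCP settings to confirm that the servers are picked up.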

This example is simple, but the possibilities are huge. This workflow lets you automate front-end testing, run database queries ("use the Postgres tool and tell me if this value is in the database"), and interact with other apps without ever leaving your IDE. The goal is to bring your entire workflow into a single interface driven by language.

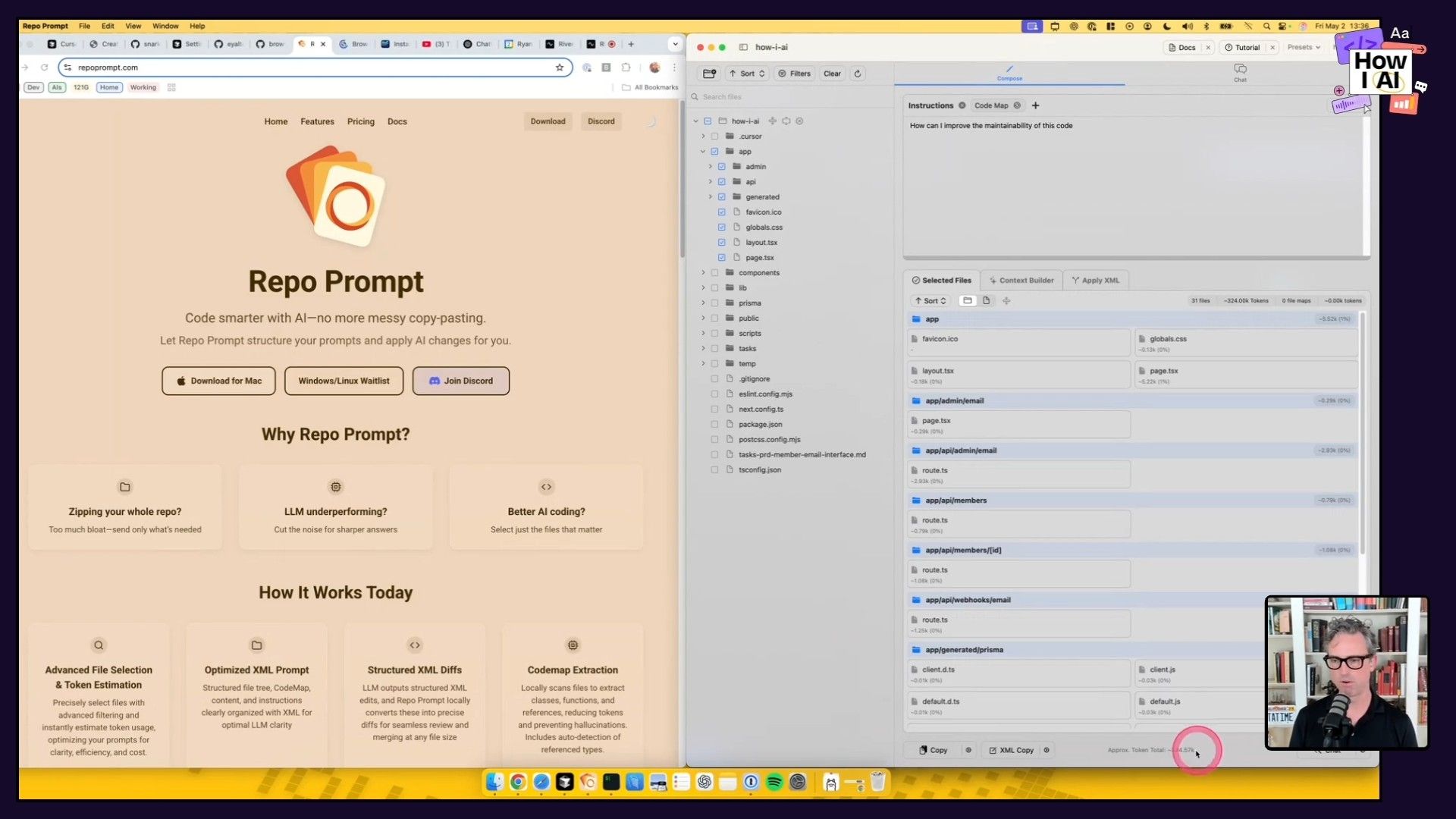

Workflow 3: Mastering Context with Repo Prompt

While Cursor does a lot of work behind the scenes to manage context, sometimes you need very specific control. For bigger, more complex jobs, Ryan turns to a tool called Repo Prompt to make sure the LLM has the exact context it needs—and nothing else.

Step-by-Step: Building the Perfect Prompt

- Select Specific Files: Ryan opens his project folder in the Repo Prompt desktop app. Instead of just dumping the whole repository on the AI, he carefully selects only the files relevant to his question: maybe a few specific components, a library file, and the `schema.prisma` file.

- Compose the Query: In the UI, he writes his prompt. He also adds one of Repo Prompt's built-in "stored prompts," like an "Architect" persona, which gives the AI extra instructions on how to think about the problem.

how can I improve the maintainability of this code?

- Generate and Copy the Full Context: Repo Prompt then combines the file contents, the user prompt, and the persona instructions into a single, perfectly formatted prompt for the LLM. It uses XML tags like `<file_contents>` and `<user_instruction>` to clearly separate each piece of information.

- Paste into the LLM: He copies this huge, context-rich prompt and pastes it directly into his preferred LLM, such as Claude or Gemini 2.5 Pro. Because the context is so precise and well-structured, the quality of the answer is much higher.

This workflow is perfect for tricky refactors, architectural reviews, or any task where giving the AI an incomplete or confusing context would send it down the wrong path. It replaces the "black box" of automatic context with the "glass box" of deliberate, precise control.
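To show what combining everything into one prompt means in practice, here is a hypothetical Python sketch of the same idea. It reads only hand-picked files, then wraps them, an optional persona, and the question in XML tags. The `<file_contents>` and `<user_instruction>` tags come from the Repo Prompt output described above. The `<file path=...>` and `<system_persona>` tags, and the overall layout, are assumptions and may not match Repo Prompt's exact format.

```python
from pathlib import Path
from typing import Dict, List


def read_selected(root: Path, relative_paths: List[str]) -> Dict[str, str]:
    """Read only the files you chose, keyed by their path relative to the repo root."""
    return {p: (root / p).read_text(encoding="utf-8") for p in relative_paths}


def build_prompt(files: Dict[str, str], instruction: str, persona: str = "") -> str:
    """Combine selected files, an optional stored persona, and the user's question
    into one XML-tagged prompt string (layout is an assumption, not Repo Prompt's)."""
    parts = ["<file_contents>"]
    for path, text in files.items():
        parts.append(f'<file path="{path}">\n{text.rstrip()}\n</file>')
    parts.append("</file_contents>")
    if persona:
        parts.append(f"<system_persona>\n{persona.strip()}\n</system_persona>")
    parts.append(f"<user_instruction>\n{instruction.strip()}\n</user_instruction>")
    return "\n".join(parts)


if __name__ == "__main__":
    prompt = build_prompt(
        {"prisma/schema.prisma": "model Member {\n  id       Int    @id\n  boatName String\n}"},
        "How can I improve the maintainability of this code?",
        persona="You are a pragmatic software architect.",
    )
    print(prompt)
```

Keeping the file selection explicit (`read_selected`) rather than walking the whole repository mirrors the point of the workflow: the model sees exactly the files you chose and nothing else.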

Conclusion: Structure is the New Superpower

What I love about Ryan's approach is how it turns AI-assisted development from a fun experiment into a professional way of building software. The common thread in all three workflows is a deep respect for context. Whether it's a PRD to guide feature development, an MCP to talk to another tool, or a hand-picked set of files from Repo Prompt, giving the AI clear and unambiguous context is everything.

As Ryan said, he feels he can now build an entire company by himself. It's not because he can do every job perfectly, but because these tools let him perform all the necessary roles of a product manager, CTO, and engineer. The startup playbook is being completely rewritten.

The next time you find yourself just "vibing" and getting stuck, try taking a page from Ryan's book. Slow down, define your requirements, structure your tasks, and give your AI partner the context it needs to succeed. You'll be amazed at how much faster you can build.

***


Try These Workflows

Step-by-step guides extracted from this episode.

How to Build a Perfectly-Contextualized Prompt for Complex Code Analysis with Repo Prompt

Use Repo Prompt to gain surgical control over your AI's context. This workflow shows you how to manually select specific code files, combine them with a detailed prompt and a persona, and generate a highly-contextualized input for an LLM like Claude or Gemini.

How to Automate Browser Actions and External Tools from Cursor using MCPs

Learn how to configure MCPs in Cursor to control external tools like a headless browser or a database directly from your IDE using natural language commands, effectively reducing context switching and manual work.

How to Turn a Feature Idea into Executable AI-Generated Tasks in Cursor

This workflow details a structured, three-step process using custom rule files in Cursor to guide an AI from a high-level feature idea to a detailed PRD, then to a complete task list, and finally through systematic execution of each task.