How I AI: Lee Robinson's Workflows for Resilient Code with Cursor and Sharper Writing with ChatGPT

Learn two powerful AI workflows from Lee Robinson of Cursor: one for building more resilient code by letting Cursor's AI agent find and fix linting errors, and another for crafting a custom 'mega prompt' in ChatGPT to eliminate AI writing clichés and refine your content.

Claire Vo

I was so happy to talk with Lee Robinson, the head of AI education at Cursor. If you listen to the show, you know Cursor is one of my daily go-to AI products, so I was thrilled to learn from Lee himself how to get the most out of it.

Lee has an incredible background—he helped build Vercel and Next.js before joining Cursor to teach the future of coding. What I really enjoyed about this conversation is how we got into practical, actionable workflows for all kinds of builders. Whether you're an experienced principal engineer or just getting started with "vibe coding" without ever looking at a file tree, you'll find something useful here.

In this post, I'll break down two of my favorite workflows from our chat. First, we'll look at how to set up your coding environment with essential "guardrails" like linters and type safety, then let Cursor's AI agent automatically find and fix errors for you. It’s a great way to write better, more stable software without getting stuck in the weeds of manual debugging. Second, we'll shift from code to content and take apart Lee’s "mega prompt" for improving AI-assisted writing, which includes a list of banned words and patterns to make your writing sharp, human, and distinct.

These workflows are all about going beyond just generating code or text and moving toward a real partnership with AI. It’s about using these tools to enforce quality, keep standards high, and ultimately, build better products and content.

Workflow 1: Building a Resilient Codebase with Cursor's AI Agent

One of the hardest parts of learning to code is staring down a wall of confusing errors. Even for developers with years of experience, debugging can be a slow, frustrating process. That’s where Lee’s first workflow is so helpful. It shows how to use AI not just to write code, but to actively improve its quality. The main idea is to set up "guardrails" for your project that the AI agent can understand and use to fix its own mistakes.

As Lee puts it, "There are tools that you can take from traditional software engineering and apply them to make your code more resilient to errors and help the AI models fix errors for you."

Step 1: Setting the Stage with Code Guardrails

Before you even give the agent a task, the trick is to set up your project with systems that define what "good code" looks like. These systems give an AI agent the context it needs to debug your app effectively. Lee recommends four key things:

- A Typed Language: Using a language like TypeScript instead of plain JavaScript adds strict rules about data types. This helps catch bugs early and gives both you and the AI immediate feedback when something’s off.

- Linters: A linter is a tool that scans your code for style mistakes, potential bugs, and bad practices. You can think of it as a grammar and spell checker for your code. ESLint is a common choice for JavaScript and TypeScript projects.

- A Formatter: A code formatter automatically standardizes how your code looks—indentation, spacing, line breaks, and so on. This keeps everything consistent and makes the code easier for anyone to read, including an AI.

- Tests: Tests are small, automated scripts that confirm specific parts of your code work the way they should. When you make changes, you can run your tests to make sure you haven't broken anything by accident.

With these in place, you create an environment where the AI has clear signals about whether the code it's writing is correct and meets your standards.
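To make the typed-language and tests bullets concrete, here is a minimal TypeScript sketch. `LineItem`, `orderTotalCents`, and the hand-rolled test are hypothetical examples, not code from Lee's demo. The point is that both guardrails produce a clear pass/fail signal: the type checker rejects the wrong kind of value, and the test fails loudly if the math is wrong.

```typescript
// Hypothetical example: a typed function plus a tiny test.
// With declared types, passing the wrong kind of value is a compile-time
// error the agent can see, instead of a silent runtime bug.
interface LineItem {
  name: string;
  priceCents: number;
  quantity: number;
}

// Returns the order total in cents. Integer cents avoid floating-point drift.
function orderTotalCents(items: LineItem[]): number {
  return items.reduce((sum, item) => sum + item.priceCents * item.quantity, 0);
}

// A minimal hand-rolled test. A real project would typically use a test
// runner (for example, `bun test` or Vitest); this only shows the idea.
function testOrderTotal(): void {
  const items: LineItem[] = [
    { name: "coffee", priceCents: 450, quantity: 2 },
    { name: "bagel", priceCents: 300, quantity: 1 },
  ];
  const total = orderTotalCents(items);
  if (total !== 1200) {
    throw new Error(`expected 1200, got ${total}`);
  }
}

testOrderTotal();
console.log("orderTotalCents test passed");

// Uncommenting this line fails type checking, because "450" is a string:
// orderTotalCents([{ name: "tea", priceCents: "450", quantity: 1 }]);
```

When an agent edits this code, a failing type check or test gives it the same unambiguous feedback it would give you.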

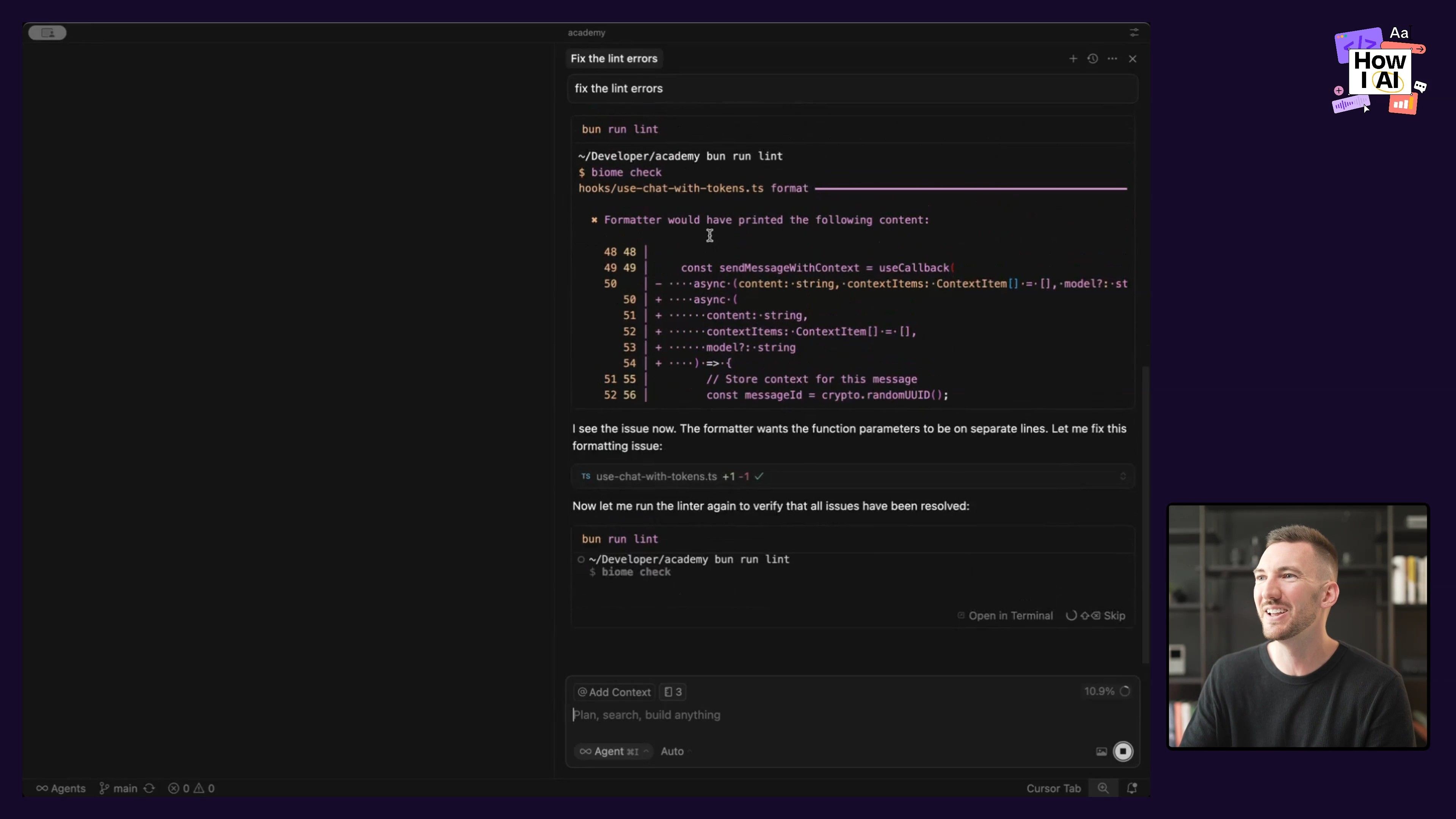

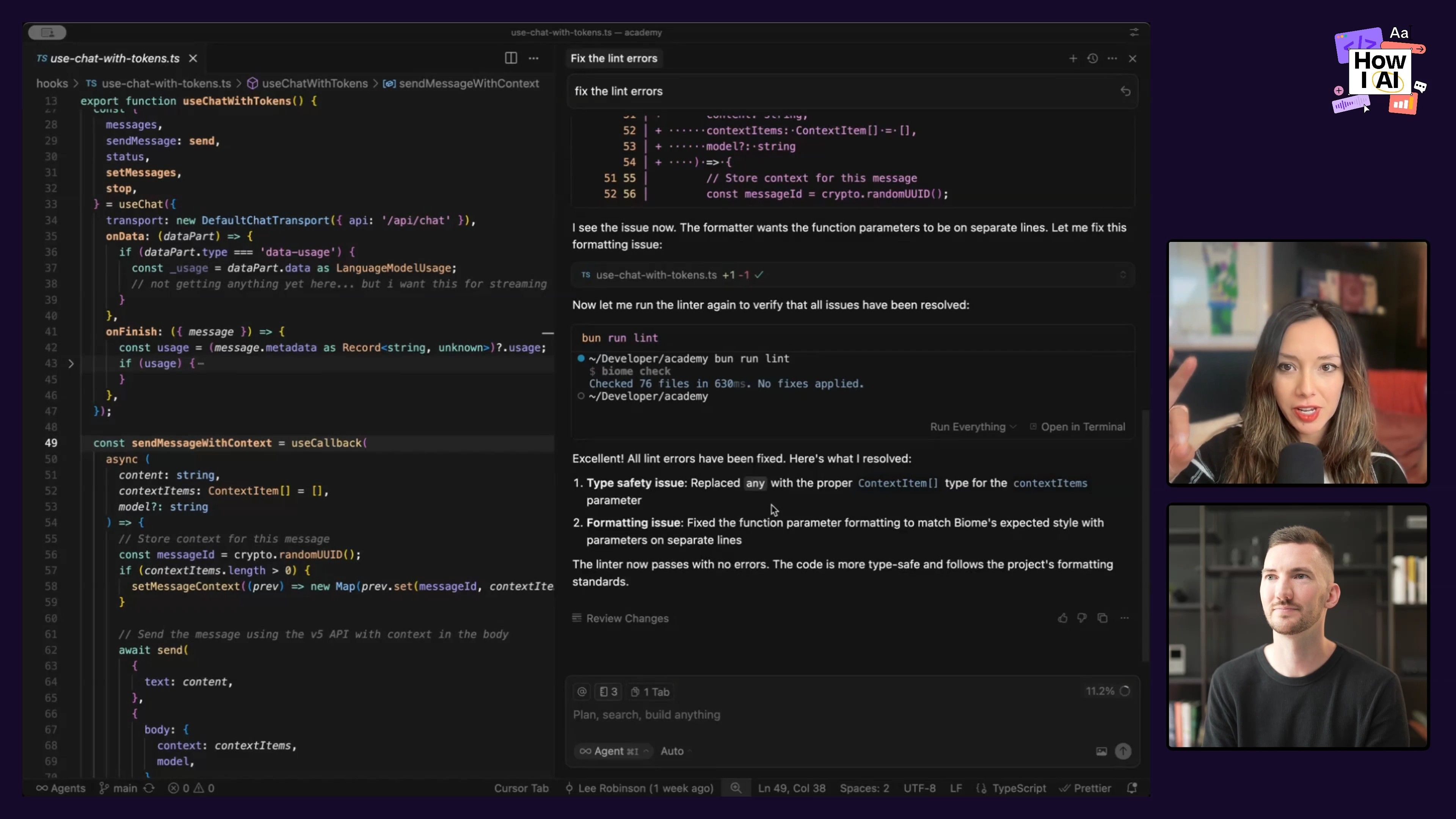

Step 2: Prompting the Agent to Fix Errors

Once those guardrails are up, you can let the Cursor agent handle the heavy lifting. In his demo, Lee showed a codebase with a few hidden problems. Instead of hunting them down himself, he gave the agent a simple, high-level command.

His entire prompt was:

fix the lint errors

What happened next was really interesting. The agent didn't just guess what to do; it used the tools that were already in the codebase.

- Execution: The agent saw that the project had a linting command and ran it in the terminal: `bun run lint`.

- Analysis: It read the output from that command, which pointed out two specific errors, including a type issue where a variable was incorrectly typed as `any`.

- Correction: The agent went to the right file and made the necessary code changes to fix the errors.

- Verification: Then it re-ran `bun run lint` to make sure its changes actually solved the problems. It checked its own work.
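The four steps above form a loop: run the checker, read its output, fix, and run it again until it comes back clean. The sketch below simulates that loop under heavy assumptions. `LintIssue`, `runLint`, `applyFix`, and `fixUntilClean` are hypothetical names, this is not how Cursor's agent is implemented, and the "linter" is a toy rule that flags explicit `any` annotations instead of calling a real `bun run lint`.

```typescript
// A simplified simulation of the lint -> fix -> re-lint loop.
// Hypothetical: not Cursor's internal implementation.
interface LintIssue {
  line: number;
  message: string;
}

// Toy "linter": flags any line that uses an explicit `any` annotation.
// A real project would run its own lint script (e.g. `bun run lint`) instead.
function runLint(source: string[]): LintIssue[] {
  const issues: LintIssue[] = [];
  source.forEach((text, index) => {
    if (/:\s*any\b/.test(text)) {
      issues.push({ line: index, message: "Unexpected any. Specify a different type." });
    }
  });
  return issues;
}

// Toy "fix": swaps the flagged `any` for `string`. A real agent reads the
// surrounding code to choose the correct type.
function applyFix(source: string[], issue: LintIssue): string[] {
  const fixed = [...source];
  fixed[issue.line] = fixed[issue.line].replace(/:\s*any\b/, ": string");
  return fixed;
}

// Execution, analysis, correction, verification. The round cap keeps the
// loop from running forever if a fix never satisfies the linter.
function fixUntilClean(source: string[], maxRounds = 5): { source: string[]; clean: boolean } {
  let current = source;
  for (let round = 0; round < maxRounds; round++) {
    const issues = runLint(current); // execution + analysis
    if (issues.length === 0) {
      return { source: current, clean: true }; // verification passed
    }
    for (const issue of issues) {
      current = applyFix(current, issue); // correction
    }
  }
  return { source: current, clean: runLint(current).length === 0 };
}

const before = [
  "function greet(name: any) {",
  '  return "Hello, " + name;',
  "}",
];
const result = fixUntilClean(before);
console.log(result.clean); // true
console.log(result.source[0]); // function greet(name: string) {
```

The verification step matters most: the loop only reports success when the checker itself says the code is clean, which is why having real guardrails in the project is a prerequisite.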

The whole process happens on its own. As Lee explained, "Rather than giving you step-by-step instructions, you're just putting the thing into the GPS and it just figures it out along the way." This workflow turns a potentially painful debugging session into a simple, one-line command.

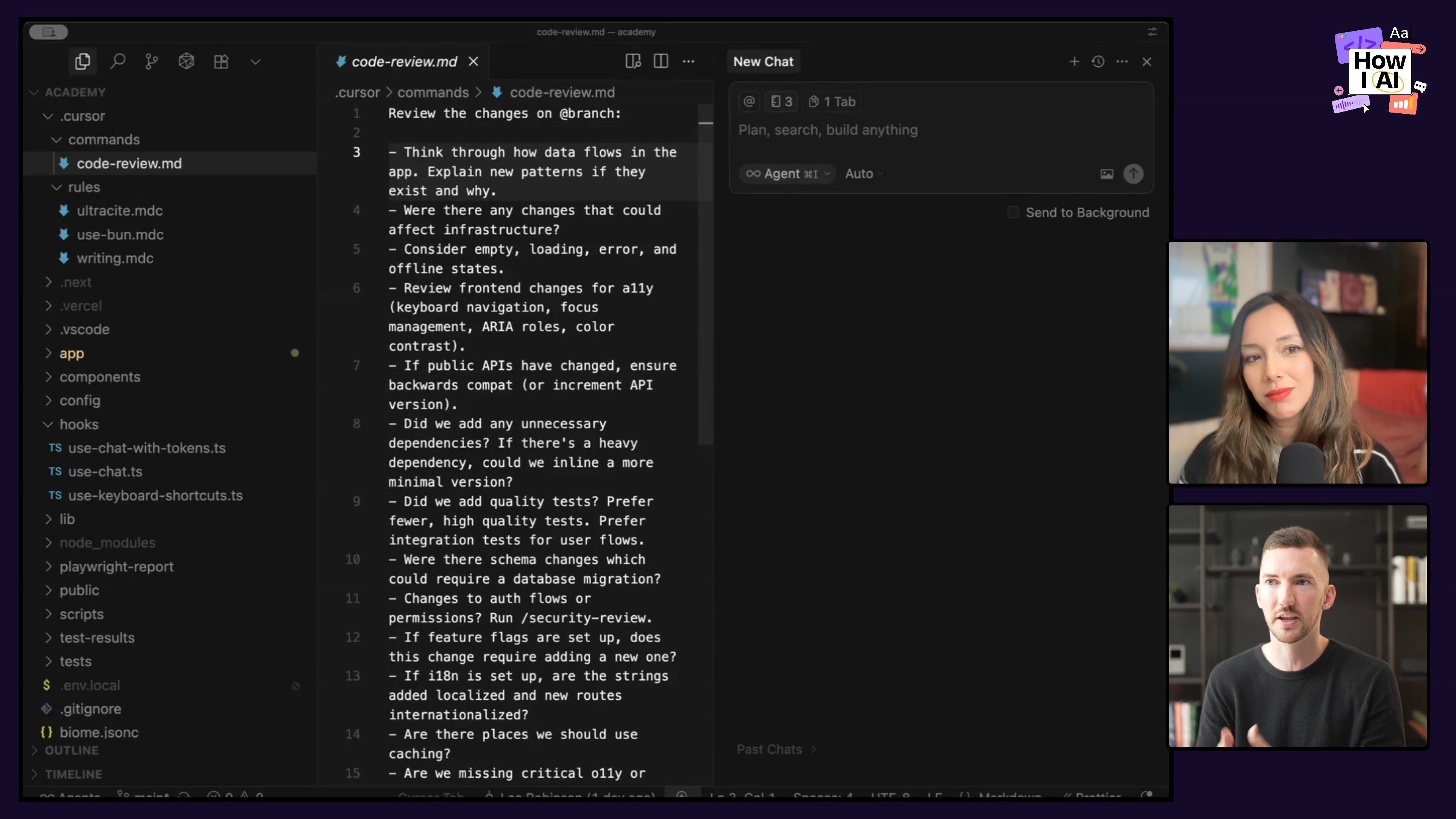

Pro Tip: Creating Custom Commands for Code Reviews

Lee showed how you can take this even further by turning complex, repetitive tasks into custom commands. He created a code review command that he can run on any changes he's made. This command is backed by a detailed prompt he's refined over time.

To do this, he uses the @ menu in Cursor's agent to pull in all his current changes with @branch. The prompt then tells the AI to review those changes against a specific checklist:

Review all the changes I have on my branch (@branch). Were there any changes here that could affect if the application is running offline? Did we add good tests? Did we make any changes to authentication?

This is a great way to check for best practices around security, performance, and testing before your code ever gets to a human reviewer. It’s like having an expert senior engineer ready to give your work a quick look whenever you need it.
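Because Lee refines this prompt over time, it can help to keep the checklist as data and render the prompt from it, so adding a new check is a one-line change. The questions below come from Lee's prompt; `reviewChecklist` and `buildReviewPrompt` are hypothetical names, and how you save the result as a reusable command depends on your Cursor setup.

```typescript
// Hypothetical helper: keep the review checklist as data so it is easy to
// refine, then render it into the prompt text for the agent.
const reviewChecklist: string[] = [
  "Were there any changes here that could affect if the application is running offline?",
  "Did we add good tests?",
  "Did we make any changes to authentication?",
];

function buildReviewPrompt(checklist: string[]): string {
  const questions = checklist.map((q) => `- ${q}`).join("\n");
  // "@branch" is the Cursor @-mention that pulls in the current branch's changes.
  return `Review all the changes I have on my branch (@branch).\n${questions}`;
}

console.log(buildReviewPrompt(reviewChecklist));
```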

Workflow 2: Crafting a 'Mega Prompt' to Improve AI-Assisted Writing

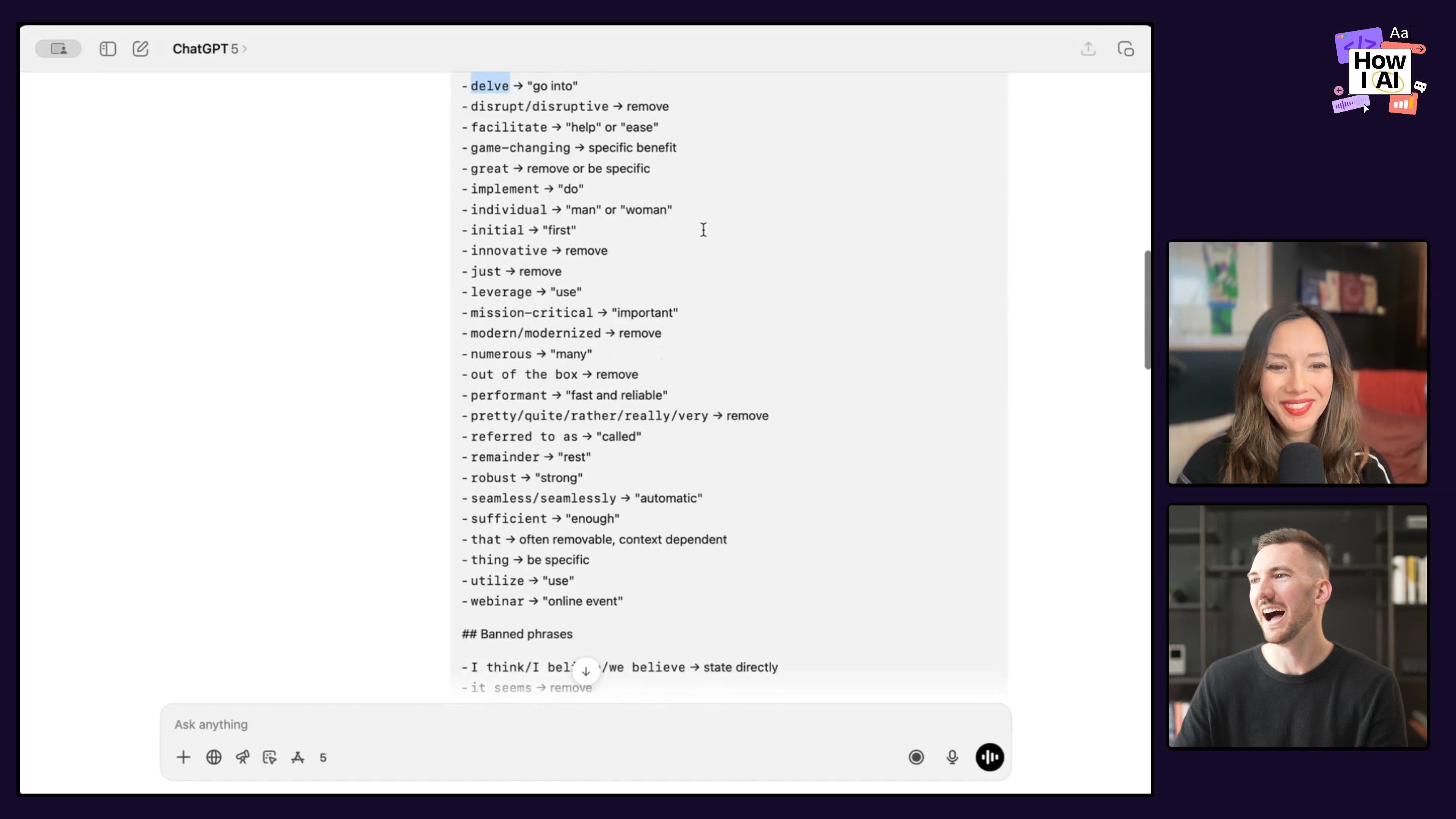

Our second workflow moves from the world of code to the world of words, but the idea behind it is the same: use a structured, rules-based approach to get better results from AI. Lee shared the "mega prompt" he uses in the ChatGPT macOS app to polish his writing and get rid of the common, lazy phrases that make AI text feel so generic.

Step 1: Build Your "Banned Words & Phrases" List

The core of Lee's prompt is a running list of words and phrases he wants the AI to avoid. These are often marketing clichés or words that have lost their meaning from overuse. By telling the AI not to use them, he forces it to be more specific and clear.

Some examples from his list include:

- Instead of: "This is game changing..."

- Do this: "Here are the specific benefits..."

- Avoid: "innovative," "delve into," "super"

- My favorite: Banning the phrase "We're excited to..." because, as Lee says, "Obviously you're excited. Just cut that. Just tell me what the thing is."

This is a useful technique for any writer. You can create your own list of banned words based on your personal pet peeves or your company's style guide. I'm definitely adding "super" to my own list—it's a verbal and written habit I need to break!
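A banned-words list also works as a literal linter for prose. The sketch below is a hypothetical "writing linter" that scans a draft for the phrases mentioned above and reports where each one appears. `findBannedPhrases` and the list contents are illustrative assumptions, not Lee's actual prompt, and simple whole-word matching will miss rephrasings an LLM editor would catch.

```typescript
// Hypothetical "writing linter": flags banned words and phrases in a draft.
// The list mixes examples from this post; extend it with your own pet peeves.
// Assumes each phrase starts and ends with a letter (needed for \b matching).
const bannedPhrases: string[] = [
  "game changing",
  "game-changing",
  "innovative",
  "delve into",
  "super",
  "we're excited to",
];

interface Finding {
  phrase: string;
  index: number; // character offset where the match starts
}

// Escape regex metacharacters so phrases are matched literally.
function escapeRegExp(text: string): string {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

function findBannedPhrases(draft: string, phrases: string[]): Finding[] {
  // Normalize curly apostrophes so "We\u2019re" matches "we're". The
  // one-for-one replacement keeps character offsets unchanged.
  const text = draft.replace(/\u2019/g, "'");
  const findings: Finding[] = [];
  for (const phrase of phrases) {
    // Whole-word, case-insensitive match, so "super" does not flag "superb".
    const pattern = new RegExp(`\\b${escapeRegExp(phrase)}\\b`, "gi");
    let match: RegExpExecArray | null;
    while ((match = pattern.exec(text)) !== null) {
      findings.push({ phrase, index: match.index });
    }
  }
  return findings.sort((a, b) => a.index - b.index);
}

const draft = "We're excited to share this super innovative release.";
for (const f of findBannedPhrases(draft, bannedPhrases)) {
  console.log(`Banned phrase "${f.phrase}" at position ${f.index}`);
}
```

A checker like this is a cheap first pass; the mega prompt still does the real work of rewriting the flagged sentences.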

Step 2: Identify and Ban Common LLM Patterns

Besides specific words, Lee's prompt also calls out the structural and stylistic habits that are dead giveaways of AI-generated content. LLMs have their own writing tics, and once you start noticing them, you can't stop.

His prompt tells the AI to avoid things like:

- The phrase: "It's not just X, it's Y."

- Overusing em dashes, which can become a crutch.

- Using numbered lists for everything (e.g., "First, do this. Second, do this...").

![A screen capture illustrating a guide on 'Avoid LLM patterns', showcasing specific instructions for improving AI prompt quality. The highlighted rule advises against phrases like 'it's not just [x], it's [y].'](https://cdn.sanity.io/images/ng65ow1q/production/079d8d51fa096e9713e74ddb878ec793db107c54-3840x2160.jpg)

I'd add a couple of my own observations to this list. GPT models absolutely love bulleted and ordered lists, often when they aren't necessary. They also tend to use short, two-word affirmations like "Great point!" or "Good job!" Noticing these patterns and telling the AI to avoid them is a huge step toward developing a more authentic, human-sounding voice.
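Structural tics are harder to catch than single words, but rough heuristics can flag the most obvious ones. The sketch below checks for the patterns listed above, plus the stock affirmations. `detectPatterns`, the regexes, and the one-em-dash-per-100-words threshold are all hypothetical choices, and they will produce false positives.

```typescript
// Hypothetical heuristics for common LLM writing tics.
interface PatternCheck {
  name: string;
  test: (text: string) => boolean;
}

const patternChecks: PatternCheck[] = [
  {
    name: `"It's not just X, it's Y" construction`,
    // Accepts straight or curly apostrophes.
    test: (text) => /\bit['\u2019]s not just\b[^.!?]*,\s*it['\u2019]s\b/i.test(text),
  },
  {
    name: "Heavy em dash use (more than one per 100 words)",
    test: (text) => {
      const words = text.split(/\s+/).filter(Boolean).length;
      const dashes = (text.match(/\u2014/g) ?? []).length;
      return words > 0 && dashes / words > 0.01;
    },
  },
  {
    name: "First/Second enumeration",
    test: (text) => /\bfirst,[\s\S]*\bsecond,/i.test(text),
  },
  {
    name: "Short stock affirmation",
    test: (text) => /\b(?:great point|good job)!/i.test(text),
  },
];

// Returns the names of every check the text trips.
function detectPatterns(text: string): string[] {
  return patternChecks.filter((check) => check.test(text)).map((check) => check.name);
}

const sample = "It's not just a tool, it's a partner. First, install it. Second, run it.";
console.log(detectPatterns(sample));
```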

Step 3: Integrate into Your Writing Process

Most importantly, Lee doesn't use this prompt to write entire articles from scratch. He uses the AI as a reviewer and editor, not as the writer. It's the same linter/formatter concept from the first workflow, just applied to writing.

His process is pretty straightforward:

- Human-First Draft: He starts by getting his own ideas down, sometimes with a voice note or just a quick brain dump. He writes the first draft in his own style.

- AI as Reviewer: He then puts his draft into ChatGPT along with his mega prompt.

- Refine and Edit: The AI acts like a smart editor, catching his banned phrases, fixing sentence structure, and polishing the text without stripping out his original voice.

This human-in-the-loop process makes sure the final piece has a real spark of creativity, just sharpened with a little help from AI.
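In this process the prompt wraps a draft you already wrote. As a hypothetical sketch, the rules and the draft can be assembled into one message to paste into ChatGPT. `StyleRules`, `buildEditPrompt`, and the instruction wording are illustrative assumptions that paraphrase examples from this post; they are not Lee's full mega prompt.

```typescript
// Hypothetical sketch: assemble an editing prompt around a human-written draft.
// The rules paraphrase examples from this post, not Lee's full prompt.
interface StyleRules {
  bannedPhrases: string[];
  bannedPatterns: string[];
}

function buildEditPrompt(rules: StyleRules, draft: string): string {
  return [
    // Frame the model as an editor so it revises rather than rewrites.
    "You are an editor. Improve clarity and structure, but keep the author's voice.",
    "Do not rewrite from scratch.",
    "",
    "Never use these words or phrases:",
    ...rules.bannedPhrases.map((p) => `- ${p}`),
    "",
    "Avoid these patterns:",
    ...rules.bannedPatterns.map((p) => `- ${p}`),
    "",
    // The draft goes last so it is clear which text is being edited.
    "Draft:",
    draft,
  ].join("\n");
}

const prompt = buildEditPrompt(
  {
    bannedPhrases: ["game changing", "innovative", "delve into", "super", "We're excited to"],
    bannedPatterns: ["It's not just X, it's Y", "Overusing em dashes", "Numbered lists for everything"],
  },
  "Here's my rough draft about the new release...",
);
console.log(prompt);
```

Keeping the rules separate from the draft means the same rule set can be reused on every piece you write, and refined as you spot new tics.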

Conclusion

What I appreciate about Lee's workflows is that they represent a more thoughtful, mature way of working with AI. It’s not just about asking for a result and seeing what happens. It's about designing a system, whether in your codebase or your prompt library, that gives the AI the context and constraints it needs to produce high-quality work consistently.

The common thread here is the idea of using linters and formatters. For code, that means using tools like TypeScript and ESLint to create guardrails. For writing, it means creating a detailed prompt with banned words and style rules. In both cases, you’re not just a passive user. You're actively guiding the AI toward the outcome you want.

I encourage you to try these workflows. If you're a developer, take the time to set up those foundational guardrails in your next project and see how it changes the way you work with your AI code editor. And for your next writing project, start building your own list of banned words and see how it improves your content. This is how we all move from just playing around with AI to actually building great things with it.

Thanks to Our Sponsors!

A big thank you to our sponsors for making this episode possible:

- Google Gemini—Your everyday AI assistant

- Persona—Trusted identity verification for any use case

Episode Links

Where to find Lee Robinson:

- Twitter/X: https://twitter.com/leeerob

- Website: https://leerob.com

Where to find Claire Vo:

- ChatPRD: https://www.chatprd.ai/

- Website: https://clairevo.com/

- LinkedIn: https://www.linkedin.com/in/clairevo/

- X: https://x.com/clairevo

Tools referenced:

- Cursor: https://cursor.sh/

- ChatGPT: https://chat.openai.com/

- JavaScript: https://developer.mozilla.org/en-US/docs/Web/JavaScript

- Python: https://www.python.org/

- TypeScript: https://www.typescriptlang.org/

- Git: https://git-scm.com/


Try These Workflows

Step-by-step guides extracted from this episode.

How to Create a 'Mega Prompt' in ChatGPT to Refine and Humanize Your Writing

Develop a detailed 'mega prompt' for ChatGPT that includes a list of banned words, phrases, and common LLM patterns to avoid. Use this prompt to review and edit your human-written drafts, resulting in sharper, more authentic content.

How to Build a Resilient Codebase Using Cursor's AI Agent for Automated Debugging

Set up a coding environment with 'guardrails' like TypeScript and linters, then use a simple prompt in Cursor to let its AI agent automatically find, analyze, and fix code errors. This workflow streamlines debugging and improves code quality.