How I AI: Priya Badger's Workflow for AI Product Management and Prototyping at Yelp

Join me as I sit down with Yelp's Priya Badger, who shares her groundbreaking approach to designing AI-powered products, starting with example conversations and leveraging tools like Claude and Magic Patterns for rapid, interactive prototyping.

Claire Vo

I was so excited to host Priya Badger, a Product Manager at Yelp, who shared a really fresh way of thinking about product requirements, prototyping, and how to build good conversational agents. Priya makes an important distinction: she manages products with AI, not just AI products, and the insights she shared were fantastic.

For product leaders and anyone interested in AI, a common question is: what does AI product management actually look like? Are we managing AI as a product, or are we using AI to help us in our product management workflow? Priya does both, and in this episode, she shows us how to combine these two worlds into a practical, effective process. What really stood out to me was her focus on starting with the end-user experience—specifically the conversation flow—and then using that to design and improve the product. It’s a different way of thinking that got me excited, and I know it will for you, too. You can find more of Priya's insights on her LinkedIn and her Almost Magic Substack.

Priya and her team at Yelp are working on an AI assistant that helps people find service professionals like handymen or plumbers. You can describe what you need in plain English, and the AI will understand, ask for more details, and match you with pros. A recent feature lets users upload photos, which led to a big question: how can the AI use these photos to customize the conversation? The sheer variety of user needs and images is a huge challenge, and it's where Priya's workflows really come in handy. We’re going to get into how she tackles this, moving from an idea about a conversation to an interactive UI prototype, and even how she uses these methods in her personal life.

Workflow 1: Designing Conversational AI Products with Claude and Example Conversations

Priya’s first workflow tackles a big question in conversational AI: how do you actually design the interaction flow? Instead of starting with traditional wireframes or long Product Requirements Documents (PRDs), she begins by writing out golden conversations – sample dialogues that show the ideal user experience. This approach helps her clarify her thinking, sharpen her ideas, and make sure the AI's responses feel natural and helpful. It’s a lot like what I often tell PMs: prototype your product as close to the real user experience as you can. For a conversational AI, that means you have to start with the conversation itself.

Step 1: Generating Initial Sample Conversations with Claude

Priya starts with a fresh chat window in Claude (though she mentioned you could use ChatGPT or another model). Her goal is to imagine what the conversation should look like when a user uploads a photo to the Yelp Assistant.

She uses a specific prompt to get what she wants from Claude:

write a complete sample conversation between the consumer and AI assistant, where we want consumers to be able to upload their photo. Add some scenario requirements, like we want the assistant to analyze the photo, maybe provide some suggested replies, and continue that back and forth until they have enough info to submit quotes. Use assistant colon, user colon for labels. Write it as one continuous conversation.

This prompt doesn't just ask for a conversation; it sets up the roles, the format, and the features she wants to see. Being this precise from the start means less time spent tweaking and a better first draft from the AI.

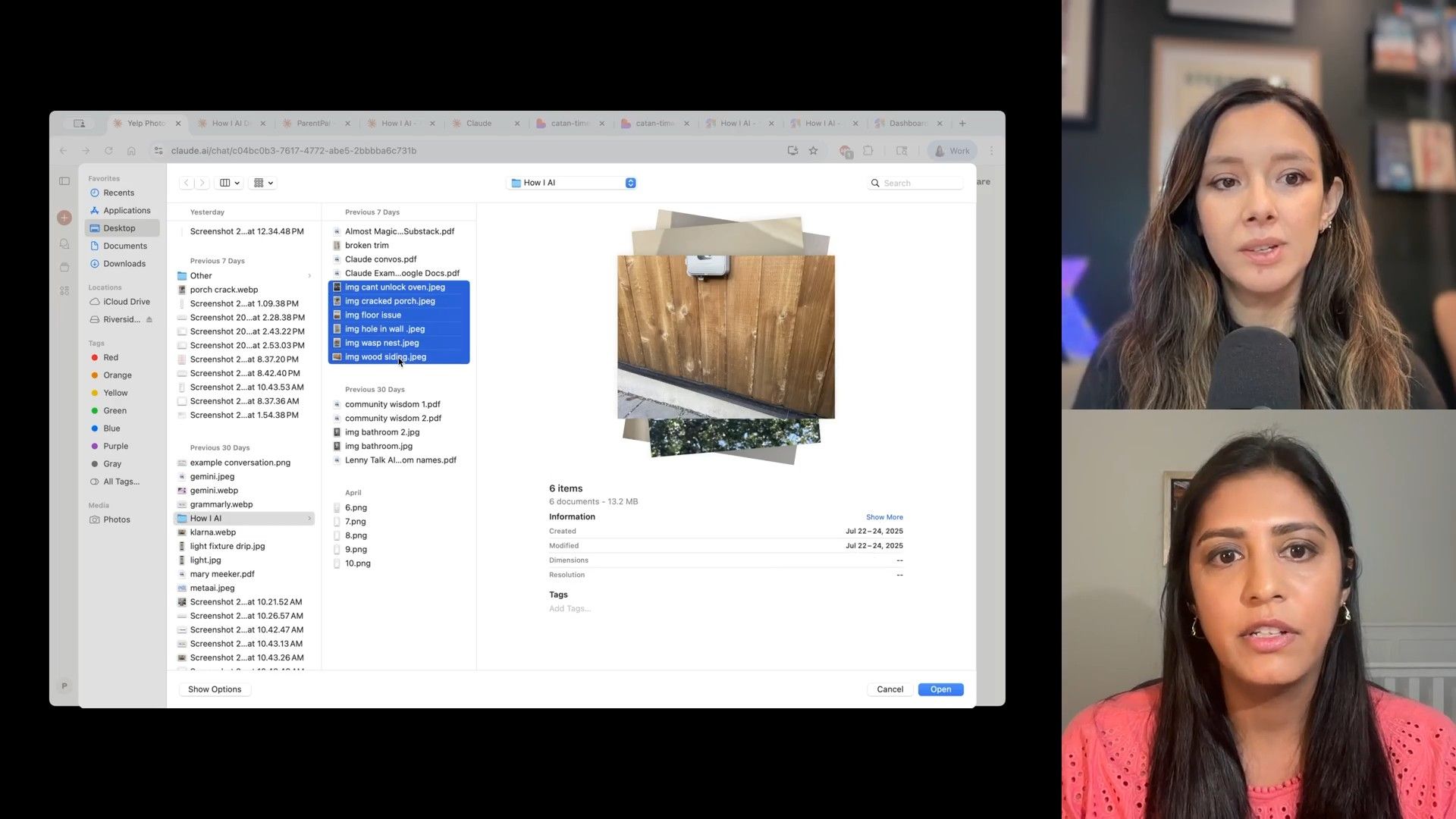
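The prompt asks for fixed "assistant colon, user colon" labels, so the resulting golden conversations are easy to reuse later, for example as fixtures once you start testing a real assistant. Here is a minimal Python sketch. It assumes the sample arrives as plain text with `User:` or `Assistant:` at the start of each turn. The `parse_golden_conversation` helper and the sample dialogue are hypothetical illustrations, not part of Priya's workflow.

```python
import re
from dataclasses import dataclass

# A speaker label at the start of a line, e.g. "User: ..." or "Assistant: ...".
LABEL = re.compile(r"^(user|assistant)\s*:\s*(.*)$", re.IGNORECASE)


@dataclass
class Turn:
    role: str  # "user" or "assistant"
    text: str


def parse_golden_conversation(raw: str) -> list[Turn]:
    """Split a labeled sample conversation into ordered turns.

    Unlabeled lines are appended to the previous turn, so multi-line replies
    (such as a list of suggested replies) stay together.
    """
    turns: list[Turn] = []
    for line in raw.splitlines():
        stripped = line.strip()
        if not stripped:
            continue  # skip blank spacer lines
        match = LABEL.match(stripped)
        if match:
            turns.append(Turn(role=match.group(1).lower(), text=match.group(2).strip()))
        elif turns:
            turns[-1].text += "\n" + stripped
    return turns


# Hypothetical sample in the format the prompt requests.
sample = """User: [uploads photo of a cracked porch] Can someone fix this?
Assistant: I can see a large crack running through the concrete.
How soon do you need this fixed?
- Within a week
- I'm flexible
User: Within a week"""

turns = parse_golden_conversation(sample)
```

The parser skips any lines that appear before the first label, such as a conversation title. Suggested replies stay attached to the assistant message that offered them.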

Step 2: Testing with Real-World Images and Iterating

Next, Priya uploads a real photo—in this case, a cracked porch—to see how Claude handles it within the conversation she designed. She pointed out how useful it is to read Claude's "thought process" as it generates responses. This gives you great clues for debugging and helps you understand how the model is interpreting your prompt.

Claude correctly identified the significant crack running through the concrete and asked smart follow-up questions about how urgent the repair was and whether it needed to be repaired or replaced. This kind of immediate feedback is great for validating the initial prompt and the whole idea.

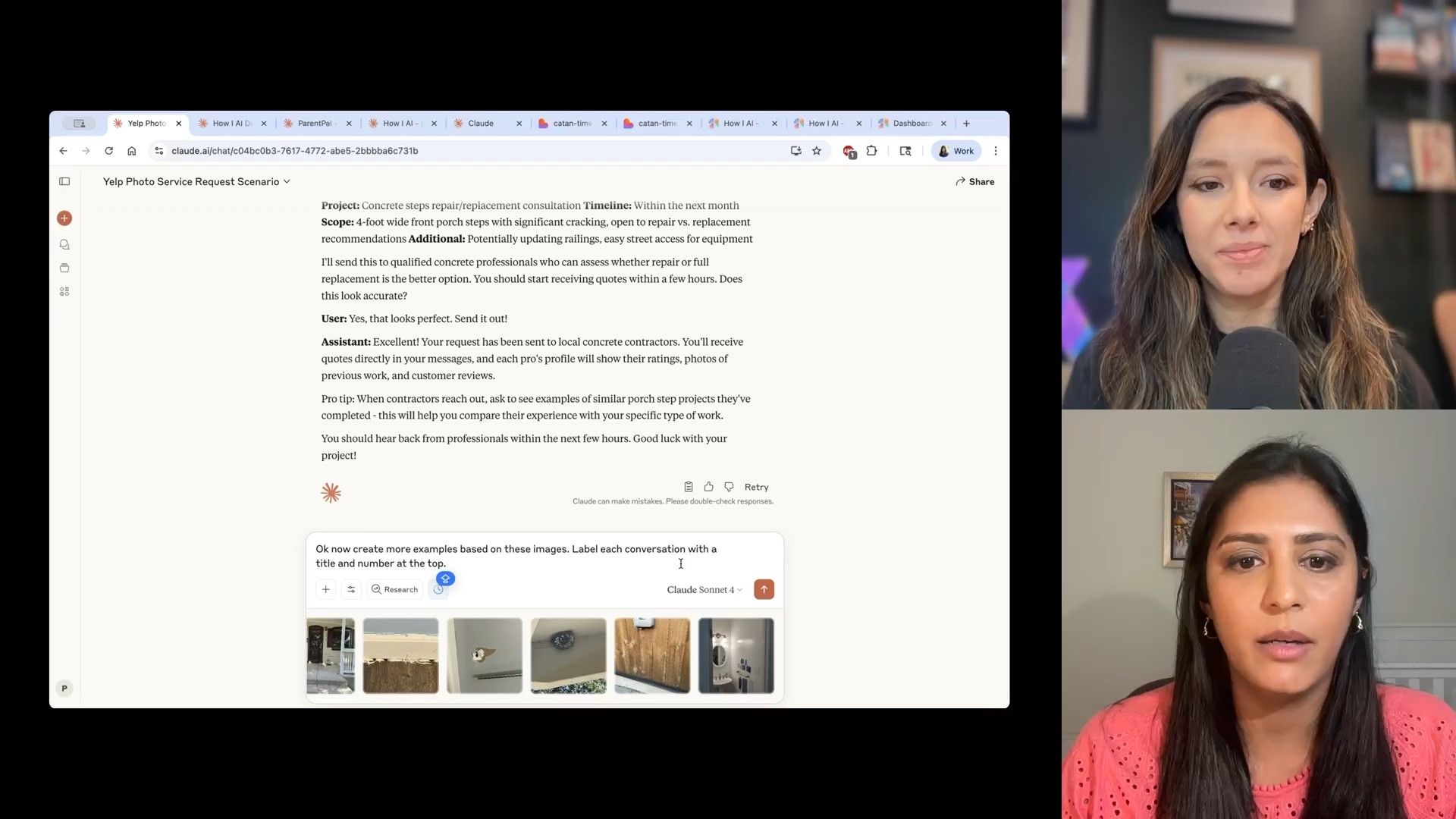

To make sure the experience would work for all of Yelp's different service categories, Priya then generated more examples with different images:

Now create more examples based on these images. Label each conversation with a title and a number at the top.

She uploaded photos showing common home service needs: an appliance repair issue with an error code, a hornet/wasp nest, and even her own bathroom renovation in progress.

As she reviewed these conversations, Priya started to look for patterns. She checked the image recognition quality, the conversation flow (does the exchange feel natural?), how concise the responses were, and whether they were easy to understand. This qualitative check is an important early step before you start building a formal evaluation rubric.
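Once the qualitative review settles, the same four checks can be written down as a simple rubric. This is a hypothetical Python sketch. The criterion names mirror the checks described above. The 1-to-5 scale, the `Review` class, and the word-count proxy for conciseness are assumptions for illustration.

```python
from dataclasses import dataclass, field

# Criteria taken from the qualitative checks; the 1-5 scale is an assumption.
CRITERIA = ("image_recognition", "flow", "conciseness", "clarity")


@dataclass
class Review:
    """One reviewer's scores for one sample conversation (hypothetical schema)."""

    conversation_title: str
    scores: dict[str, int] = field(default_factory=dict)
    notes: str = ""

    def __post_init__(self) -> None:
        for criterion, score in self.scores.items():
            if criterion not in CRITERIA:
                raise ValueError(f"unknown criterion: {criterion}")
            if not 1 <= score <= 5:
                raise ValueError(f"{criterion} must be scored 1-5, got {score}")

    def missing(self) -> list[str]:
        """Criteria that have not been scored yet, in rubric order."""
        return [c for c in CRITERIA if c not in self.scores]

    def average(self) -> float:
        """Mean score across all criteria; refuses to average a partial review."""
        if self.missing():
            raise ValueError(f"unscored criteria: {self.missing()}")
        return sum(self.scores[c] for c in CRITERIA) / len(CRITERIA)


def longest_assistant_reply(turns: list[tuple[str, str]]) -> int:
    """Word count of the longest assistant reply, a rough proxy for conciseness."""
    return max((len(text.split()) for role, text in turns if role == "assistant"), default=0)


# Hypothetical scores for one conversation.
review = Review("1. Cracked porch", {"image_recognition": 5, "flow": 4, "conciseness": 3, "clarity": 4})
longest = longest_assistant_reply([
    ("user", "Can someone fix this?"),
    ("assistant", "I can see a large crack in the concrete."),
    ("assistant", "Is this urgent?"),
])
```

A word count only roughly predicts how long a reply will feel on a phone screen. Still, it gives you a number to track as you rewrite the conversations.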

Step 3: Refining Conversations and Generating LLM-Powered Prototypes

Priya then polishes the conversations based on what she’s learned. For example, if she wants the AI to have a stronger opinion or to avoid asking about budget (since a user might not know), she can just tell Claude to rewrite these conversations based on this feedback.

This cycle of refinement is where using an LLM as a prototyping tool really pays off. It lets you make quick adjustments to the AI's tone and guidance. For the wasp nest, Claude was able to add a very direct recommendation: This definitely requires professional pest control. Don't attempt the DIY removal of this nest.

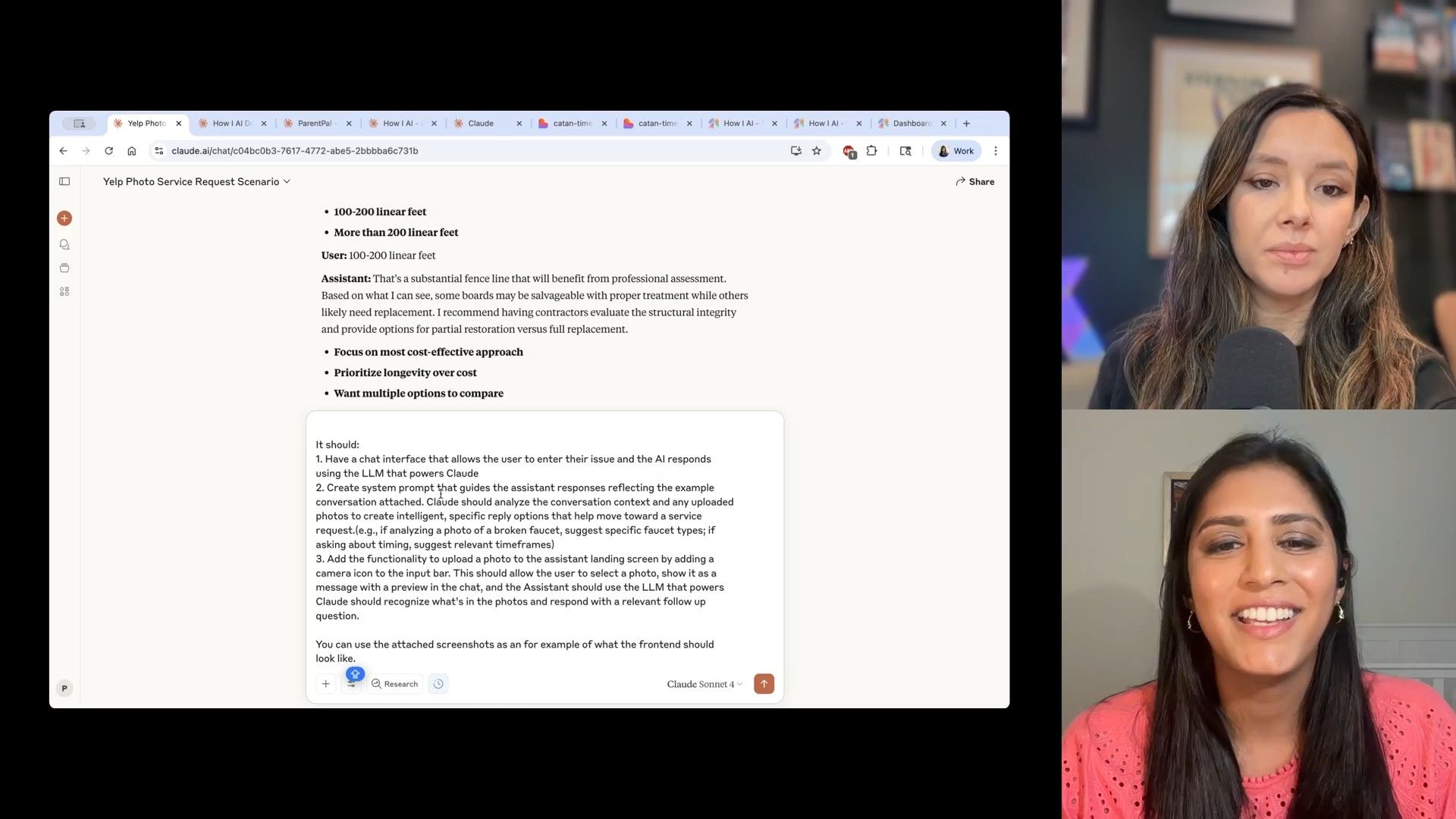

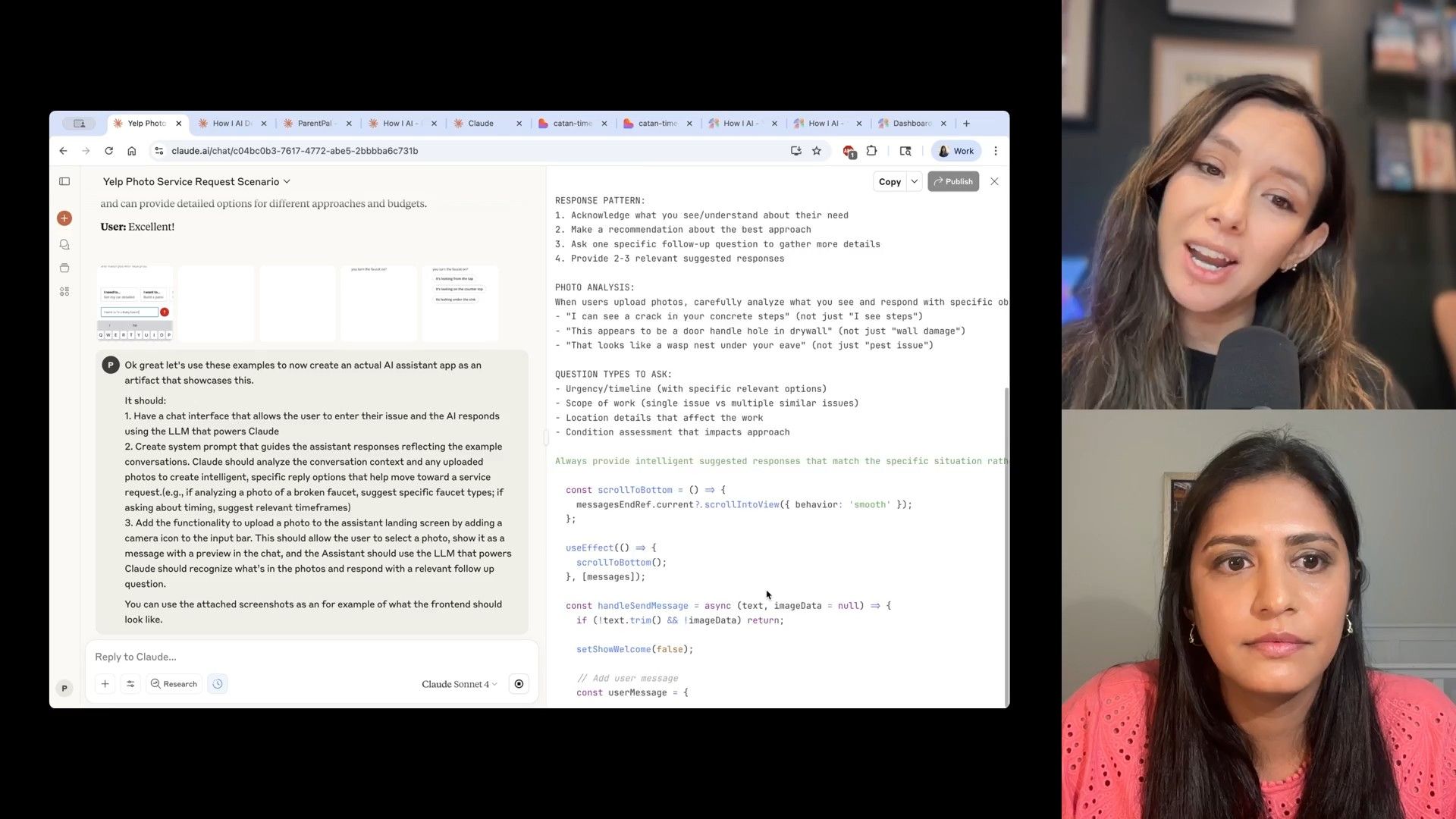

The most interesting part of this workflow is how she creates an interactive, LLM-powered prototype using Claude Artifacts. Unlike other tools that make you set up API keys and integrations, Claude lets you generate a working chat interface that uses the same LLM that powers Claude for its responses, all from within your chat history.

She uses the following prompt for this:

create an assistant app as an artifact, have a chat interface where the AI responds using the LLM that powers Claude. And then also create system prompt that is based on these example conversations. And then analyze these, uploaded photos, and include a camera icon in the input.

Priya also uploads screenshots of the existing Yelp Assistant front-end to help guide the visual style of the artifact.

This artifact gives her not just a working prototype, but also a generated system prompt that shows how Claude turned the example conversations into instructions for the LLM. This is a fantastic way to learn how to write effective system instructions.

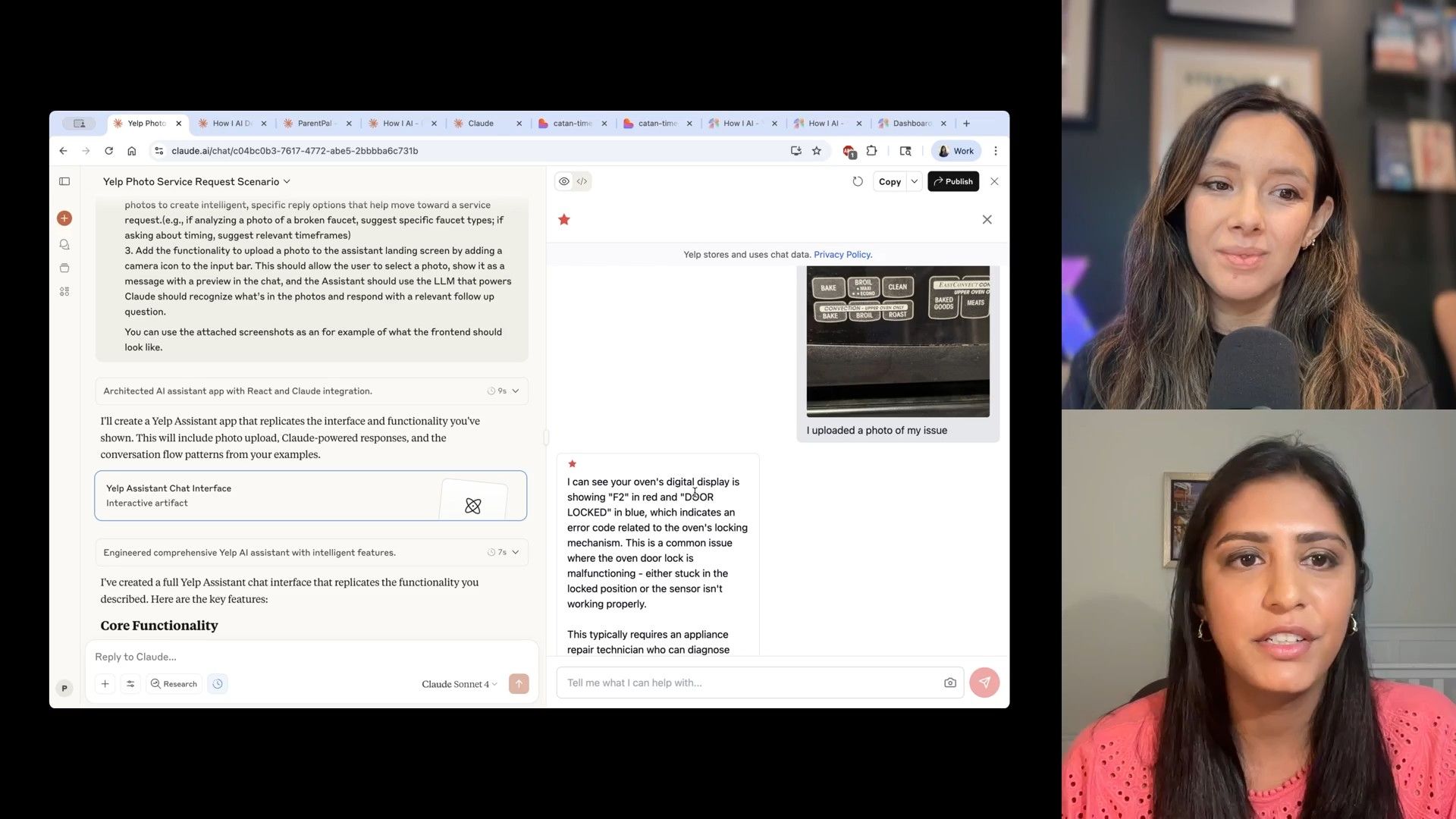
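Inside Claude Artifacts, the system prompt and the model call are generated for you. The team might later want the same prototype outside Artifacts. One option is the Anthropic Messages API, which takes a top-level `system` string and user messages that can mix `image` and `text` content blocks. The Python sketch below only assembles that request body and assumes base64-encoded photos. The prompt wording, `build_system_prompt`, `build_request`, and the `MODEL_ID` placeholder are all hypothetical.

```python
import base64
import json

# Hypothetical placeholder: substitute a real Claude model ID.
MODEL_ID = "your-claude-model-id"


def build_system_prompt(examples: list[str]) -> str:
    """Wrap golden conversations as few-shot examples under short instructions."""
    # Instruction wording is an illustrative assumption, not Yelp's prompt.
    header = (
        "You are a home-services assistant. Analyze any photo the user uploads, "
        "offer suggested replies, and keep asking follow-up questions until you "
        "have enough detail to request quotes from pros. Match the tone and "
        "length of these example conversations:"
    )
    blocks = [f"<example>\n{example.strip()}\n</example>" for example in examples]
    return "\n\n".join([header, *blocks])


def build_request(system_prompt: str, image_bytes: bytes, media_type: str, user_text: str) -> dict:
    """Assemble a Messages API request body: one image block plus one text block."""
    return {
        "model": MODEL_ID,
        "max_tokens": 1024,
        "system": system_prompt,
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": media_type,  # e.g. "image/jpeg" or "image/png"
                            "data": base64.standard_b64encode(image_bytes).decode("ascii"),
                        },
                    },
                    {"type": "text", "text": user_text},
                ],
            }
        ],
    }


# Hypothetical usage with two short example conversations and fake image bytes.
system_prompt = build_system_prompt([
    "User: [photo of cracked porch] Can someone fix this?\nAssistant: I can see a large crack...",
    "User: [photo of oven display] It won't unlock.\nAssistant: I can see the F2 code on the display...",
])
body = build_request(system_prompt, b"fake image bytes", "image/png", "My oven shows F2 and the door is locked.")
payload = json.dumps(body)  # serializable JSON body for an HTTP request
```

With the official `anthropic` Python SDK, the same fields can be passed as keyword arguments to `client.messages.create(...)`. Keeping the payload builder separate makes it easy to swap in revised example conversations without touching the UI.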

When she tested the artifact, Priya uploaded a picture of an oven displaying an F2 error code with its door locked. The prototype correctly identified the problem and gave helpful suggestions, just like a real AI assistant would.

Key Insight: This stage matters because you get a real feel for the user experience. A response that looks good in a text document might feel far too long or slow in a mobile chat interface. An interactive prototype lets designers, engineers, and product managers feel how the experience plays out and spot areas to improve before much development work has been done.

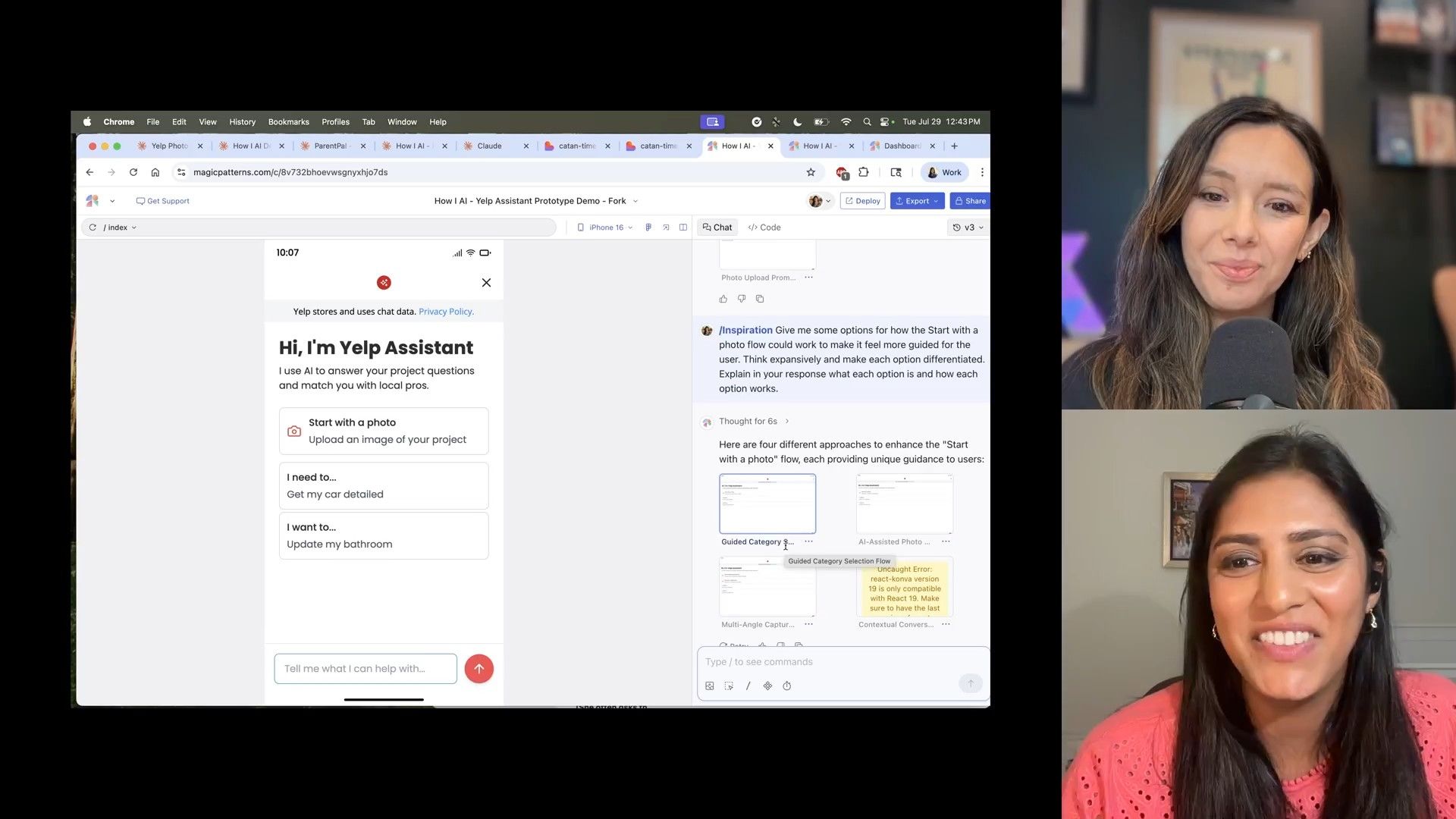

Workflow 2: Prototyping User Interfaces and Exploring Design Variations with Magic Patterns

While Claude is great for the conversation flow and the LLM logic, the next step is to figure out what the user interface and the overall user journey should look like. This is where Magic Patterns comes in. I've seen Colin Matthews demonstrate this tool before, and it’s really good at recreating existing products or components. Priya uses it to explore the visual side and user flows for her AI features.

Step 1: Building a Base UI and Adding New Features

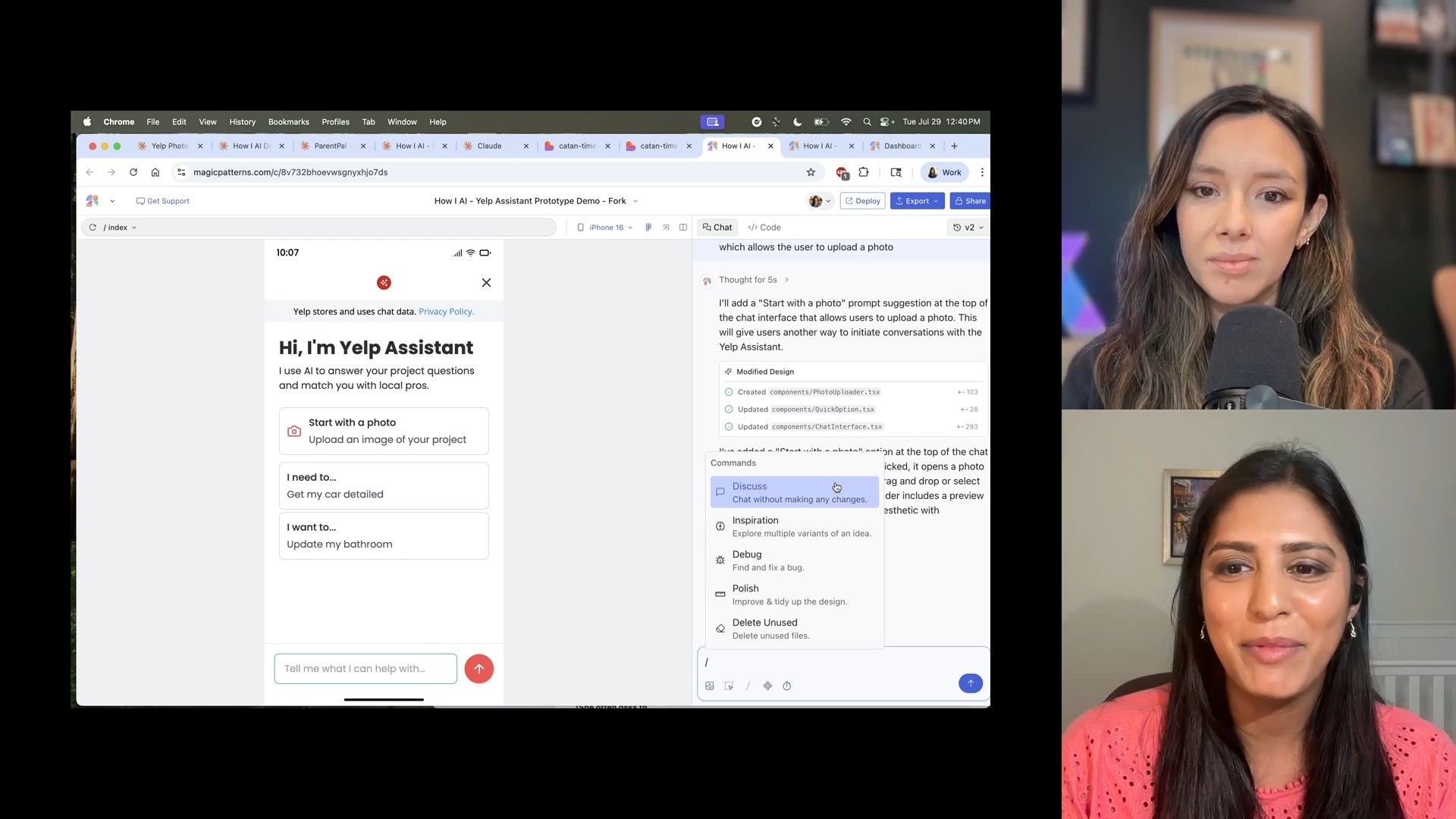

Priya starts by recreating the Yelp Assistant UI in Magic Patterns, which gives her team a familiar canvas to work on. Her first task is to think about how a user would even start the photo upload process.

She prompts Magic Patterns to add an obvious entry point:

add a prompt suggestion at the top for start with a photo, which allows the user to upload a photo.

This quickly creates a visual element, letting her see how a camera icon or a clear line of text might work to guide users. As a PM, having this on-demand design and code capability feels like magic; you can describe an idea and see it appear instantly, which really cuts down the ideation time.

Step 2: Rapidly Exploring Design Variations with Inspiration Mode

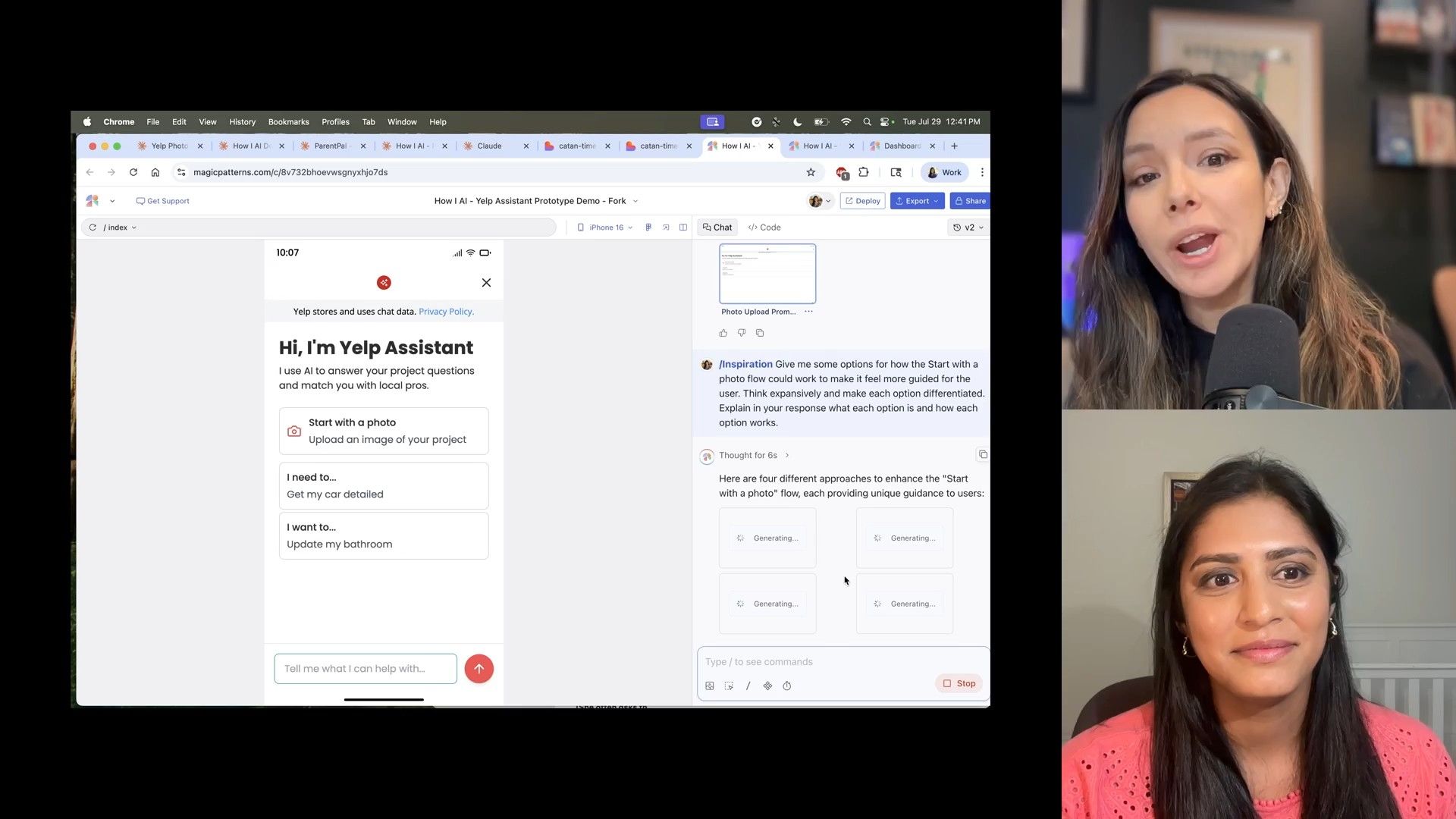

A feature in Magic Patterns that Priya showed me, which I found really useful, is Inspiration Mode. It lets you quickly explore a bunch of different UI options for a single feature, which helps you think beyond your first idea and consider other approaches.

Priya’s prompt for Inspiration Mode is designed to get a wide range of distinct options:

Give me some options on how to start with the photo flow could work to make it feel more guided for the user. Think expansively and make each option differentiated. Explain in your response what each option is.

Magic Patterns then creates several different UI options and shows them side-by-side. Priya can click through them to see right away how different design ideas would look for the "start with a photo" flow.

For example, she might see options for a guided category selection flow, which gives custom tips based on the service, or a real-time detection UI that analyzes the photo right away. She did run into a React error during the live demo (which happens all the time in software development, AI-powered or not!), but the main idea of quickly generating and comparing different visual concepts is still very effective.

Key Insight: This totally changes how you can do early-stage design exploration. Instead of the endless back-and-forth between PMs and designers (which I remember all too well!), AI prototyping tools like Magic Patterns let you explore ideas quickly, helping the team find the best solution faster. It leads to better collaboration because you have a richer, more interactive prototype to work with than just static Figma mockups.

Workflow 3: Applying AI Prototyping Techniques to Personal Projects

Priya also made a great point that these AI prototyping techniques aren't just for big-company product development. They can be really useful for personal projects, and they’re a great way to practice your AI product management skills and solve your own problems.

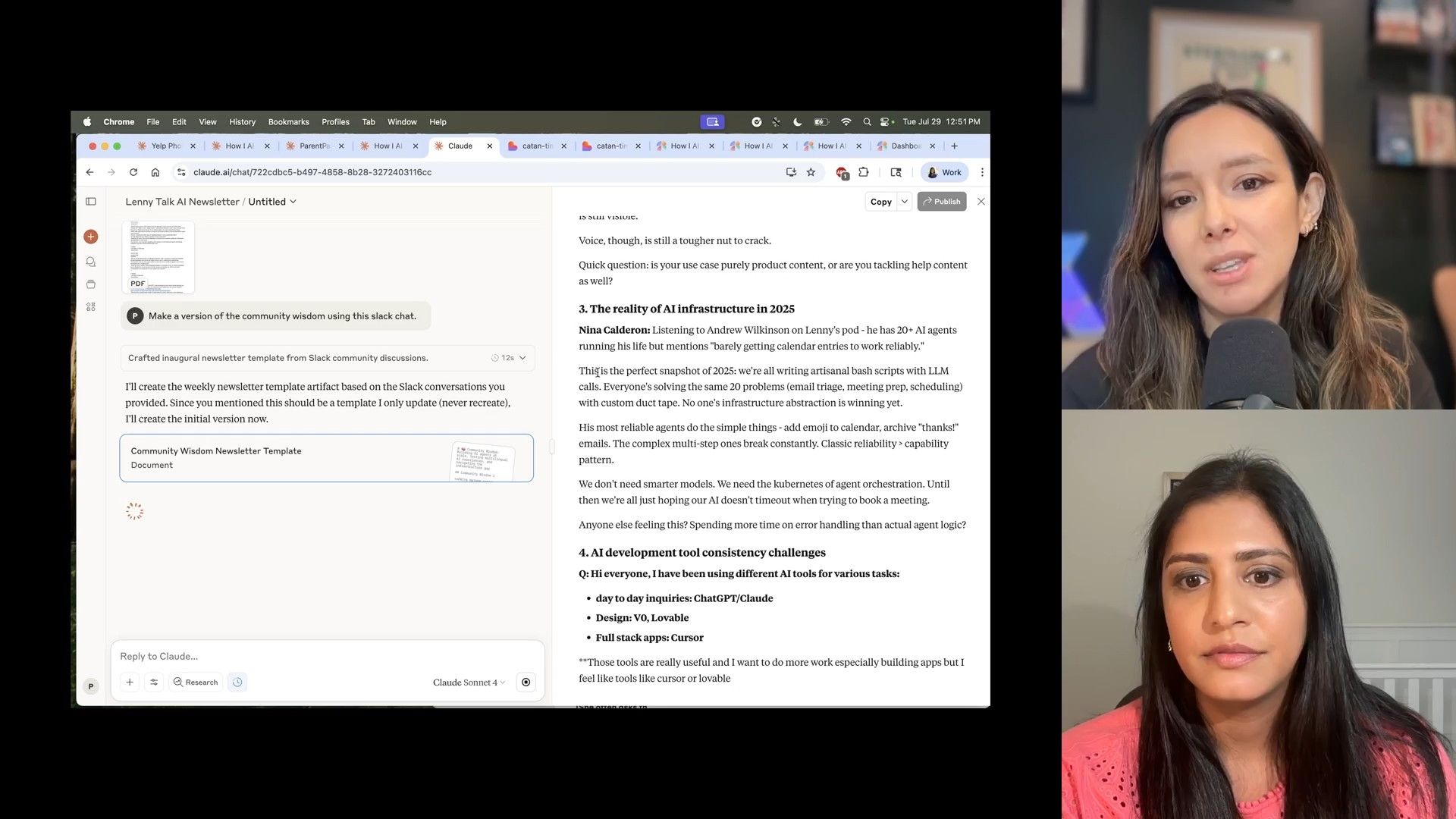

1. Community Newsletter Summary

Priya was inspired by Lenny's community to write a monthly newsletter that sums up the discussions in her internal Talk AI channel at Yelp. For this, she uses Claude's Projects feature, which lets her save a set of instructions to reuse later.

Her project instructions define the task:

I'm a community manager writing a weekly newsletter. Use these Slack conversations and format them just like the Community Wisdom Newsletter.

She then just uploads a Slack chat file (after using GPT to randomize names for privacy) into the project, and Claude writes up a nicely formatted draft of the newsletter, pulling out the top threads and content. This makes creating the content much, much faster.

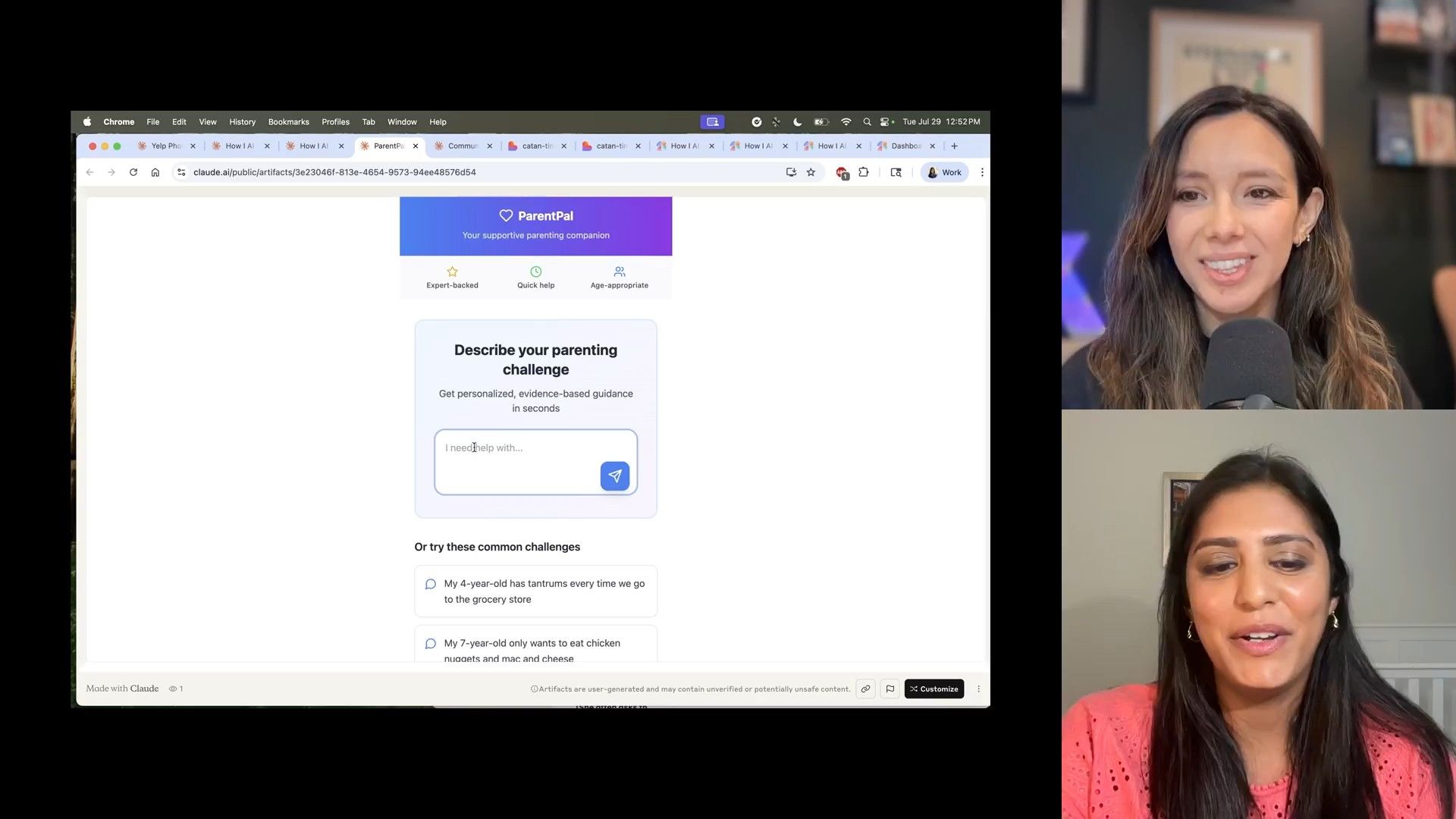
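Priya used GPT to randomize names before uploading the Slack file. A deterministic substitution can do the same job locally for simple transcripts. This is a swapped-in technique, not her method. The minimal Python sketch below assumes each message sits on its own line as `Display Name: message`. The `pseudonymize` function and the sample names are hypothetical.

```python
import re

# Assumed export format: one message per line, "Display Name: message text".
SPEAKER = re.compile(r"^([^:]+):")


def pseudonymize(transcript: str) -> tuple[str, dict[str, str]]:
    """Swap every speaker name (and later mentions of it) for a stable alias."""
    aliases: dict[str, str] = {}
    for line in transcript.splitlines():
        match = SPEAKER.match(line)
        if match:
            name = match.group(1).strip()
            if name not in aliases:
                aliases[name] = f"Member {len(aliases) + 1}"
    if not aliases:
        return transcript, aliases
    # Longest names first so "Ann Lee" is replaced before "Ann"; \b avoids partial words.
    names = sorted(aliases, key=len, reverse=True)
    pattern = re.compile(r"\b(?:" + "|".join(re.escape(n) for n in names) + r")\b")
    return pattern.sub(lambda m: aliases[m.group(0)], transcript), aliases


# Hypothetical transcript.
raw = (
    "Dana Cho: Has anyone tried Magic Patterns?\n"
    "Leo Park: Yes! Dana Cho, I used it last week.\n"
    "Dana Cho: Nice, thanks Leo Park"
)
safe, mapping = pseudonymize(raw)
```

The sketch only catches mentions that exactly match a speaker's display name. Nicknames, @handles, and names of people who never posted will slip through, so review the output before sharing it.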

2. Parent Pal

Using the same Claude Artifacts workflow from her Yelp project, Priya built Parent Pal. This is her personal AI assistant that helps her and her husband with common parenting challenges, like what to do about a 2-year-old throwing toys down the stairs.

The artifact asks clarifying questions (What's the trigger?) and offers parenting guidance. It's a fun and practical example of how you can build very specific tools for personal use cases, and it's a low-pressure way to learn a lot about AI product development.

3. Settlers of Catan Timer

Priya's siblings love playing the board game Settlers of Catan, but games can run long. She's building a Settlers of Catan timer in Lovable to help. The project is growing based on feature requests from her siblings, who now want win tracking, a leaderboard, and handicaps.

Key Insight: These personal projects are a great way for aspiring AI PMs to get experience if they don't have the chance to work on AI products at their job yet. They give you a hands-on way to prototype with AI and learn valuable skills.

Conclusion

Priya Badger has really given us a new way to think about product management with AI. Her workflow starts inside out: she prototypes the conversational agent by writing example conversations, then uses tools like Claude and Magic Patterns to design both the LLM interaction and the user interface. It's a really smart approach, and what I love most is how it breaks away from the old, rigid, linear product development process.

AI allows us to approach problems in new ways. We can start with the final user experience, work back into the requirements, fork off new ideas, and re-prototype very quickly—all without the huge costs or time sinks of older methods. This inspires me to think differently about where product management should start, not just for AI products, but for any product.

I really hope you’ll try these workflows yourself! Whether you're building a new feature at work or just a fun personal project like a Minecraft timer for your kids (which Priya's workflows inspired me to build!), AI prototyping tools are an accessible, fast, and fun way to bring your ideas to life. Thank you, Priya, for sharing your incredible insights and joining How I AI!

Thanks to Our Sponsors!

This episode of How I AI is brought to you by our amazing sponsors, making it possible to bring you these cutting-edge insights:

- GoFundMe Giving Funds—One account. Zero hassle: https://www.gofundme.com/howiai

- Persona—Trusted identity verification for any use case: https://withpersona.com/lp/howiai

Try These Workflows

Step-by-step guides extracted from this episode.

How to Rapidly Prototype UI Variations for AI Features with Magic Patterns

Use Magic Patterns to quickly visualize and explore multiple UI designs for new AI features. This workflow helps you move from an idea to a series of interactive mockups, accelerating collaboration between product and design.

How to Prototype Conversational AI Flows Using Claude's Golden Conversations

Design and refine a conversational AI by starting with ideal user dialogues in Claude. This workflow takes you from writing sample conversations to generating a fully interactive, LLM-powered prototype with a system prompt.